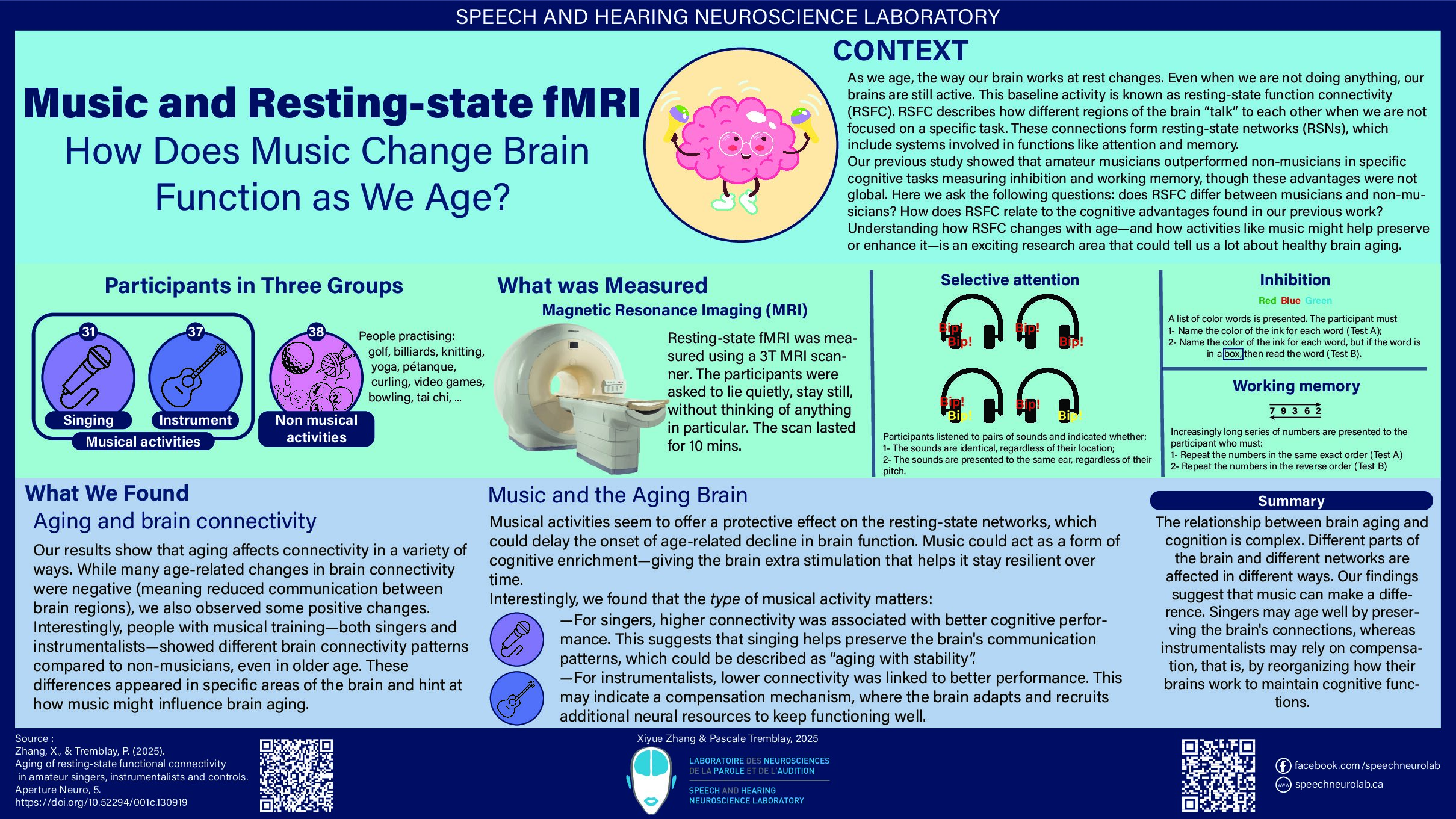

This is the fourth article in our series on speech sound processing. In previous publications, the first stages of speech processing were described, from the ear to the auditory cortex (Figure 1). When central auditory processing is successfully completed, additional levels of (linguistic) processing are required to identify and interpret speech sounds, and to understand their meaning. The speech signal therefore continues its journey inside the brain!

Figure 1. Summary of the main systems involved in speech perception.

The next big step is speech perception, during which our brain maps the acoustic speech signal to different mental representations (e.g., phonemes, syllables, words). Two main questions arise in the study of this process:

- What does the brain perceive? Or what is the nature of the fundamental linguistic units that our brain uses during speech perception (phonemes, syllables, morphemes, words)?

- How does the brain perceive? Or how does it manage to segment and identify these units?

PART A: What does the brain perceive?

Speech perception, like perception in general, is largely categorical: our brain sorts linguistic units into separate categories. As a result, differences between units belonging to different categories are perceived more easily than differences between units belonging to the same category.

This phenomenon also occurs in the visual system. For example, the rainbow presents a continuum of all the colors visible to the human eye; yet, what we perceive is a set of juxtaposed bands of colors (e.g., red, orange, yellow, green, blue, indigo, violet).

Perception is different from sensation! It is an interpretation of reality.
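The categorical effect described above can be illustrated with a toy model. This is only a sketch under stated assumptions: the acoustic continuum is abstract (values from 0 to 1), and the boundary position, the slope, and the function names are invented for illustration rather than taken from any study. The idea is that two stimuli separated by the same physical step are easy to tell apart when they straddle the category boundary, and hard to tell apart when they fall on the same side.

```python
import math

BOUNDARY = 0.5  # assumed position of the category boundary on a 0..1 continuum
SLOPE = 20.0    # assumed steepness of the labeling function


def prob_category_b(stimulus: float) -> float:
    """Probability that a listener labels the stimulus as category B."""
    return 1.0 / (1.0 + math.exp(-SLOPE * (stimulus - BOUNDARY)))


def perceived_difference(s1: float, s2: float) -> float:
    """Toy discriminability: how differently the two stimuli are labeled."""
    return abs(prob_category_b(s1) - prob_category_b(s2))


# Both pairs are separated by the same physical step (0.2 on the continuum).
within = perceived_difference(0.10, 0.30)  # both inside category A
across = perceived_difference(0.40, 0.60)  # one on each side of the boundary

print(f"within-category difference: {within:.3f}")
print(f"across-category difference: {across:.3f}")
```

In this sketch the across-category pair comes out far more distinguishable than the within-category pair, even though the physical distance is identical.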

Our brain uses categories, each with distinct properties, but what is the fundamental nature of these categories? The smallest unit of linguistic representation is the phoneme. The phoneme is a speech sound (a vowel or a consonant) with unique properties. These properties, also called phonological features or distinctive features, reflect how we position the organs and muscles of the mouth and the vocal folds to produce different speech sounds. This is similar to playing a musical instrument, where changing the configuration of the fingers produces different sounds. For example, the words cap (/kæp/) and tap (/tæp/) are distinguished by their initial phonemes (/k/ and /t/), which differ only in place of articulation: in this case, the position of the tongue in the mouth when the sound is produced. Try producing the words cap and tap in a loop, and pay attention to the position of your tongue. Note that the system of phonemes (or distinctive sounds) differs from one language to another. Our perception of phonemes is better for the sounds of the languages we know!
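One way to picture distinctive features is to treat each phoneme as a small bundle of properties and compare the bundles. The sketch below is a simplification: it uses only three features, the `Phoneme` class and `differing_features` helper are hypothetical names, and /d/ is added purely for contrast (it is not part of the example above).

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Phoneme:
    symbol: str
    voiced: bool  # do the vocal folds vibrate?
    place: str    # where in the mouth the sound is made
    manner: str   # how the airflow is shaped


# Simplified feature values for three English consonants
K = Phoneme("k", voiced=False, place="velar", manner="plosive")
T = Phoneme("t", voiced=False, place="alveolar", manner="plosive")
D = Phoneme("d", voiced=True, place="alveolar", manner="plosive")  # added for contrast


def differing_features(a: Phoneme, b: Phoneme) -> list[str]:
    """Return the names of the features on which two phonemes differ."""
    return [
        f.name
        for f in fields(a)
        if f.name != "symbol" and getattr(a, f.name) != getattr(b, f.name)
    ]


print(differing_features(K, T))  # ['place']
print(differing_features(T, D))  # ['voiced']
```

The first comparison mirrors the cap/tap example: /k/ and /t/ share everything except the place of articulation.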

Studies suggest that speech perception does not rely solely on these so-called auditory (phonological) representations. Indeed, to support the process of speech perception, our brain calls on other linguistic representations (e.g., morphemes, words), visual representations (the visible movements of the lips) and articulatory representations (the position of organs and other structures that allow us to produce speech sounds). For example, the McGurk effect, which we discussed in another post, reveals that our brain uses lip reading to perceive speech, although we are often not aware of it (click here to try the experiment): in sum, it is easier to perceive speech when it is both auditory and visual.

PART B: How does the brain perceive?

Speech perception involves two important processes: the chopping of the signal into smaller units, called segmentation, and the identification of these units.

1. Speech segmentation

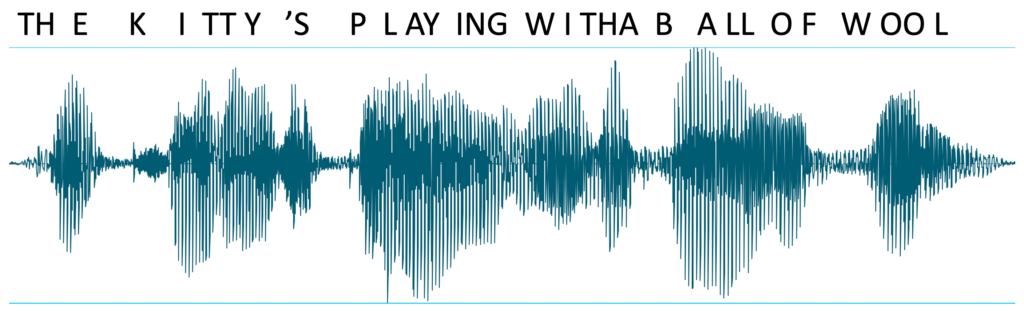

Unlike written words, spoken words are produced in a continuous stream, although we are often under the impression that we hear separate words (see Figure 2). Speech perception is thus the result of the laborious task of finding the relevant boundaries within the extremely swift speech stream. This challenge is more obvious when we learn a new language, because we do not yet have the same segmentation expertise, or when we hear an unknown language, which sounds terribly fast to us because we cannot extract the words from it.

Figure 2. This oscillogram represents the sentence The kitty is playing with a ball of wool. The letters are placed approximately above their actual position in the acoustic signal. Although this signal seems to be divided into sections, these sections do not correspond at all to the words.

Studies have suggested that from the first months of life, children can distinguish sounds and sequences of sounds (e.g., syllables, words) using a variety of acoustic cues, including intonation, stress, and the frequency at which sequences of sounds are produced in the environment (perception is said to be “statistical”). All this information is used by babies and young children to learn new words.
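The "statistical" cue mentioned above is often formalized as a transitional probability: how likely one syllable is to follow another. The sketch below is a toy demonstration under stated assumptions, not a model of what infants actually compute. The three "words" are made up, the stream is built in an arbitrary fixed order, and the 0.9 threshold is an assumption. Within a word, each syllable always predicts the next one; across word boundaries, the next syllable is less predictable, so a drop in probability signals a likely boundary.

```python
from collections import Counter

# Hypothetical three-syllable "words" (made up for this sketch)
WORDS = {"A": ["bi", "da", "ku"], "B": ["pa", "do", "ti"], "C": ["go", "la", "bu"]}
ORDER = "ABCACBABCACB"  # arbitrary word order, with no pauses between words

# The continuous stream of syllables that the "listener" receives
stream = [syllable for word in ORDER for syllable in WORDS[word]]

pair_counts = Counter(zip(stream, stream[1:]))  # how often x is followed by y
first_counts = Counter(stream[:-1])             # how often x has a successor


def transitional_probability(x: str, y: str) -> float:
    """Estimate P(next syllable is y | current syllable is x)."""
    return pair_counts[(x, y)] / first_counts[x]


def segment(syllables: list[str], threshold: float = 0.9) -> list[str]:
    """Insert a boundary wherever the transitional probability drops below threshold."""
    words, current = [], [syllables[0]]
    for prev, nxt in zip(syllables, syllables[1:]):
        if transitional_probability(prev, nxt) < threshold:
            words.append("".join(current))
            current = []
        current.append(nxt)
    words.append("".join(current))
    return words


print(sorted(set(segment(stream))))  # ['bidaku', 'golabu', 'padoti']
```

Real speech is far noisier than this stream, which is why this cue is thought to work alongside intonation and stress rather than on its own.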

2. Speech identification

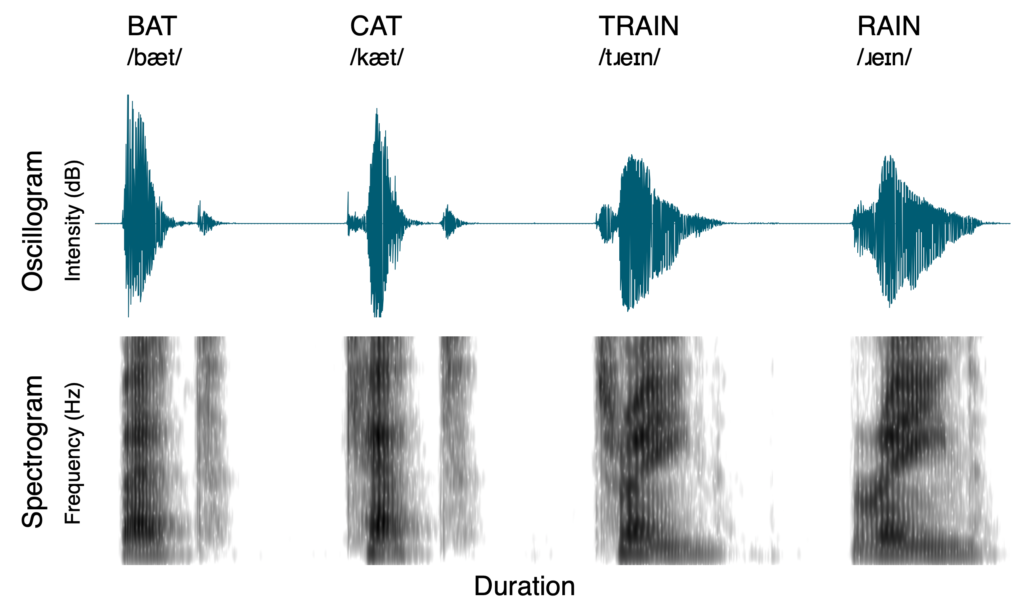

Due to our brain’s high efficiency in understanding language, one might think that the process of identifying words is simple. Yet, acoustic signals are complex, as exemplified below (Figure 3).

Figure 3. The top section (an oscillogram) allows us to observe changes in intensity (louder or quieter) over time, while the bottom section (a spectrogram) illustrates the intensity of sounds (the darker the line, the stronger the intensity), and the spectral composition (lower frequencies towards the bottom and higher frequencies towards the top) over time. More examples can be found in this article.

It can be seen in Figure 3 that words such as train and rain are very similar acoustically (intensity, duration, frequency), despite not having the same number of phonemes. Segmenting and identifying each portion of the signal therefore represents a major challenge! This analysis must also take into account the great variability of the acoustic signal, i.e., the fact that the same sound can be produced in multiple ways depending on the characteristics of the speaker (e.g., the pitch, accent, intonation, emotion), the phonological context (e.g., the preceding and following phonemes), the communication context (e.g., type of speech act, such as shouting vs reading aloud), and any type of event that could interfere with the acoustic signal, such as the presence of background noise or a simple moment of inattention. All these elements must be considered by the brain to correctly interpret the speech signal.

This difficulty is reflected in the challenges that voice recognition systems have faced in recent decades (and still face), which stem in part from an underestimation of the great variability of the acoustic signal. Indeed, although we know a lot about the properties of phonemes, and this knowledge has been integrated into recognition systems, these “digital” representations of sounds were clearly insufficient to precisely identify sounds and words across different recording contexts and different speakers.

So how is the brain able to do all that? A key element is that the brain makes predictions about what has been heard, relying not only on many sensory and contextual cues but also on years of accumulated knowledge. Speech perception is therefore the product of a thorough analysis of a set of cues and of repeated exposure to language across a wide variety of contexts and speakers. The brain develops expertise by forming abstract representations relevant to the processing of the speech sounds to which it has been exposed. The brain can then identify speech even when the auditory information is incomplete, by calling on a multitude of networks and mechanisms. The phonemic restoration effect exemplifies this skill: when part of the acoustic signal is ambiguous or “blurred” (e.g., when listening to speech in the presence of noise), our brain can mentally “restore” the missing information. You can check this perceptual illusion in the video below:

Voice recognition systems have greatly improved with the use of artificial intelligence. These systems are now trained through exposure to large corpora of speech, which improves their ability to decipher what was said.

PART C: Networks involved in speech perception

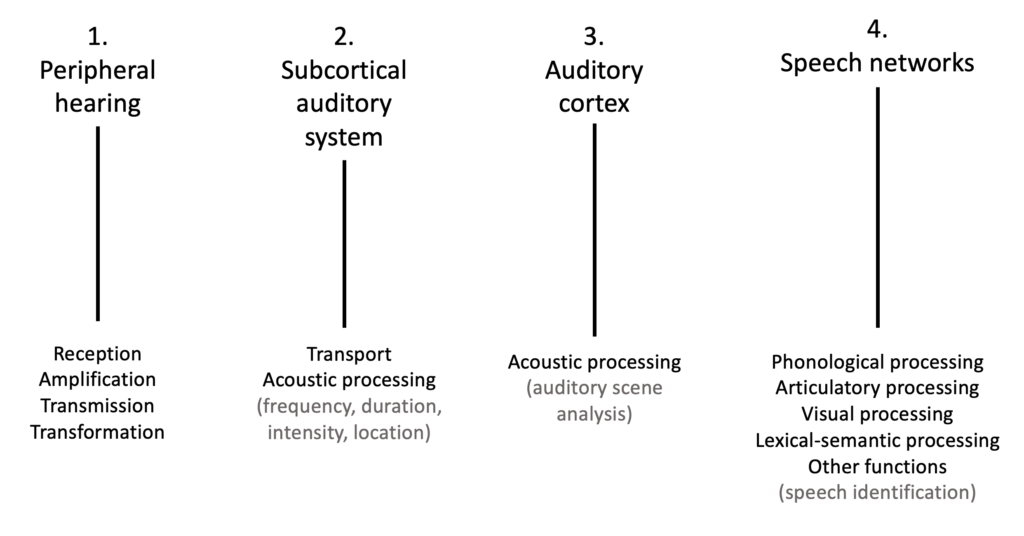

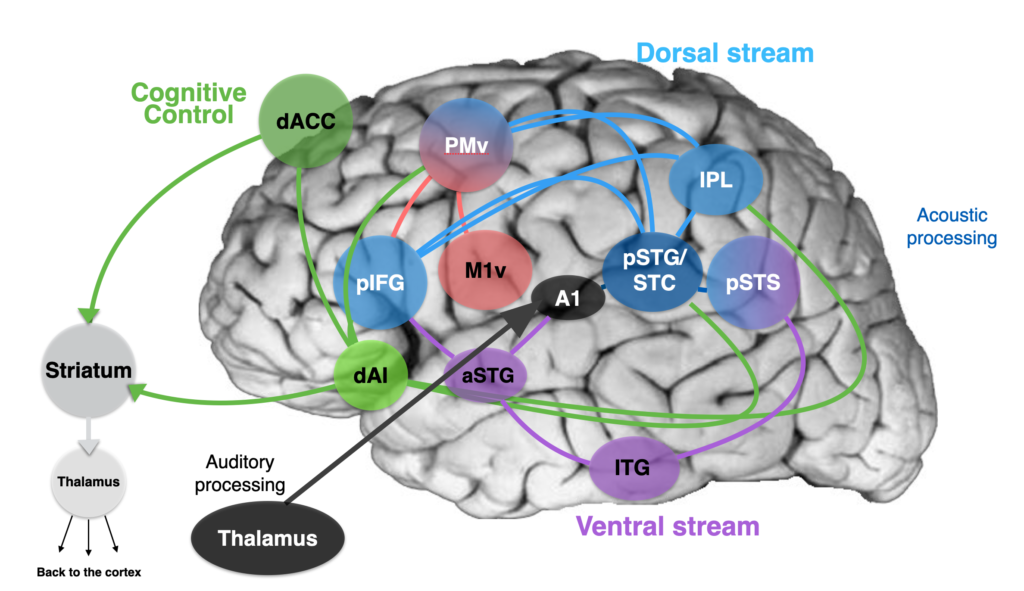

In the past 40 years or so, brain imaging and brain stimulation techniques have contributed to a better understanding of the neurobiological mechanisms underlying speech perception. As mentioned earlier, the interpretation of the speech signal is difficult and requires the participation of a variety of interacting systems (Figure 4 below).

The superior temporal cortex (including the superior temporal gyrus and the superior temporal sulcus) is classically identified as a key region for speech perception. The auditory abstract representations of speech (known as “phonological” representations) are believed to be stored in the posterior region of this cortex.

As discussed above, several levels of representation are engaged during speech processing. Indeed, the regions associated with visual processing (occipital lobe), multimodal processing (inferior parietal lobule or IPL) and articulatory processing (ventral premotor cortex or PMv, primary motor cortex or M1) also contribute to the identification of speech sounds through the dorsal speech stream (Figure 4).

The regions associated with semantic processing (access to the meaning of words, sentences, and discourse), namely the temporal pole, the middle temporal gyrus, and the inferior temporal gyrus (ITG), also participate in the perception of speech through the ventral speech stream. Semantic representations allow our brain to decipher words that have been spoken based on the context and on our mental lexicon (i.e., our mental “dictionary”). The inferior frontal gyrus (IFG) is another key region for speech and language processing. Its posterior part is involved in phonological processing and phonological memory, and its anterior part in lexical access.

Finally, regions involved in cognitive and executive functions (e.g., short-term verbal memory, memorization of heard sounds, or attention; shown in green in Figure 4), including the cingulate gyrus, insular cortex, striatum, and thalamus, are also engaged during speech processing.

Figure 4. This figure illustrates the various networks that are involved in speech perception, as well as their complex interactions. When speech is heard, preliminary acoustic processing takes place in the thalamus and in the auditory cortex (in black) (see previous articles: the subcortical auditory system; the auditory cortex). The dorsal stream (in blue) includes a set of regions that contain abstract representations of speech that contribute to the identification process. In the ventral stream (in purple), semantic processing is performed; access to word meanings and prior knowledge of words also contribute to deciphering speech. © Pascale Tremblay

To summarize, speech perception involves multiple interacting networks and different abstract linguistic representations that allow us to understand speech, despite the variability in the acoustic signal and listening conditions.

References:

Jusczyk, P. W., Houston, D. M., & Newsome, M. (1999). The beginnings of word segmentation in English-learning infants. Cogn Psychol, 39(3-4), 159-207. doi:10.1006/cogp.1999.0716. PMID: 10631011.

Schomers, M. R., & Pulvermüller, F. (2016). Is the Sensorimotor Cortex Relevant for Speech Perception and Understanding? An Integrative Review. Front Hum Neurosci, 10, 435. doi:10.3389/fnhum.2016.00435

Other articles in this series:

The peripheral auditory system (article 1 of 4)

The central subcortical auditory system (article 2 of 4)

The auditory cortex (article 3 of 4)

Additional suggested readings: