Do you like testing illusions? We invite you to experience an illusion linked to speech perception!

To start the experiment, click on the video.

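The McGurk effect was first described in 1976 (McGurk & MacDonald, 1976) and has since been replicated by research teams throughout the world, with speakers of various languages. In this illusion, what we see changes what we hear: for example, when the sound /ba/ is paired with a video of a face articulating /ga/, many people perceive /da/. The effect reflects audiovisual integration, the process by which the brain combines auditory and visual information. Since its discovery, many researchers have tried to understand how this integration works: When does it occur? Is it obligatory? Does it change with age? Which brain regions are involved?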

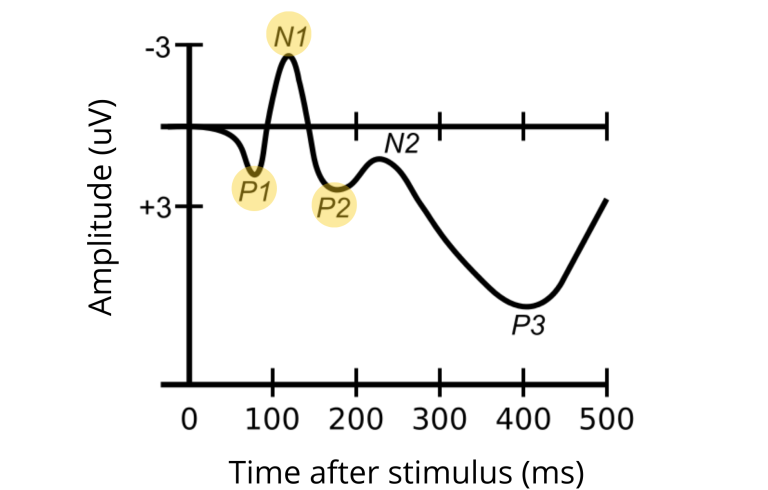

Many research teams have studied audiovisual integration using electroencephalography (EEG), a technique that measures the electrical activity of the brain through electrodes placed on the scalp. From the EEG recording, researchers can extract evoked potentials (also called event-related potentials, or ERPs), which are responses of the central nervous system to a stimulus, such as a sound (Luck, 2014). Some of these potentials are associated with audiovisual integration, such as those forming the P1-N1-P2 complex (see figure 1). This complex consists of positive and negative deflections peaking approximately 50 ms (P1), 100 ms (N1) and 200 ms (P2) after the onset of a stimulus such as a sound. These auditory evoked potentials indicate that the sound has reached the auditory cortex and that acoustic-phonetic processing has begun, that is, the processing of sound characteristics such as pitch (higher or lower) or duration (longer or shorter), which distinguish the sounds of speech (e.g., the /p/ vs. the /ch/ sound).

Evoked potentials can be characterized by their latency (the time between the onset of the stimulus and the “peak” of the evoked potential, measured in milliseconds) and their amplitude (the “height” of the evoked potential, measured in microvolts, or µV; a microvolt is one millionth of a volt, which is very weak electrical activity!).

Figure 1. Diagram illustrating the P1-N1-P2 complex (ERP components), adapted from Choms, licensed under CC BY-SA 3.0.
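
To make latency and amplitude more concrete, here is a minimal Python sketch that builds a toy P1-N1-P2 waveform and reads off the peak of each component. Everything in it is a hypothetical illustration: the waveform is a sum of three Gaussian bumps with made-up amplitudes and widths rather than real EEG data, the helper names (`synthetic_erp`, `peak`) are our own, and the search windows around 50, 100 and 200 ms are assumptions chosen to fit this toy signal.

```python
import math


def gaussian(t_ms, center_ms, width_ms, amp_uv):
    """One bump of the toy waveform: amplitude amp_uv (in µV) peaking at center_ms."""
    return amp_uv * math.exp(-((t_ms - center_ms) ** 2) / (2 * width_ms ** 2))


def synthetic_erp(duration_ms=400, fs_hz=1000):
    """Hypothetical P1-N1-P2 waveform (illustrative values, not real data).

    Returns (times_ms, voltages_uv), sampled every 1000 / fs_hz ms from stimulus onset.
    """
    step_ms = 1000 / fs_hz
    times = [i * step_ms for i in range(int(duration_ms / step_ms) + 1)]
    volts = [
        gaussian(t, 50, 10, 1.5)       # P1: positive deflection near 50 ms
        + gaussian(t, 100, 15, -4.0)   # N1: negative deflection near 100 ms
        + gaussian(t, 200, 25, 3.0)    # P2: positive deflection near 200 ms
        for t in times
    ]
    return times, volts


def peak(times, volts, window_ms, polarity):
    """Latency (ms) and amplitude (µV) of the most extreme sample in a time window.

    polarity=+1 looks for the most positive point, polarity=-1 for the most negative.
    """
    lo, hi = window_ms
    candidates = [(t, v) for t, v in zip(times, volts) if lo <= t <= hi]
    return max(candidates, key=lambda tv: polarity * tv[1])


times, volts = synthetic_erp()
for name, window, polarity in [("P1", (30, 75), +1), ("N1", (75, 150), -1), ("P2", (150, 275), +1)]:
    lat, amp = peak(times, volts, window, polarity)
    print(f"{name}: latency {lat:.0f} ms, amplitude {amp:.2f} uV")
```

In this sketch, latency is simply the time of the most extreme point in the window and amplitude is the voltage at that point, so the script recovers peaks at about 50, 100 and 200 ms. Real analyses work on averaged, filtered recordings and may rely on other measures, such as the mean amplitude over a window.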

It is well established that, compared with auditory-only perception, adding visual articulatory information reduces the amplitude and shortens the latency of the N1/P2 complex (e.g., Besle et al., 2004; Klucharev et al., 2003; Treille et al., 2014a, 2014b, 2017, 2018; van Wassenhove et al., 2005). This complex is therefore generally regarded as a reliable marker of audiovisual integration. In a study led by Pascale Tremblay, director of our laboratory, we examined audiovisual integration in older adults by measuring the P1-N1-P2 complex. Our results showed that the P1 component, which reflects basic auditory processing, is not affected by age. Although the N1 and P2 components showed some age-related changes, this complex, and the integration it reflects, remains largely functional in older adults. Lip-reading ability, however, is greatly reduced with age (Tremblay et al., 2021). Other research teams have observed that the McGurk effect is stronger in older than in younger people, suggesting that the way auditory and visual information is used to perceive speech changes with age (Hirst et al., 2018; McGurk & MacDonald, 1976; Sekiyama et al., 2014).
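
The comparison between conditions can be sketched in the same way. The minimal Python example below, again using hypothetical values rather than data from any of the cited studies, models only the N1 in an auditory-only condition and in an audiovisual condition, then computes how much earlier and how much smaller the audiovisual N1 is. Because N1 is a negative deflection, a "decrease in amplitude" here means a peak closer to zero. The function names and the 75 to 150 ms search window are our own assumptions.

```python
import math


def n1_waveform(peak_ms, amp_uv, width_ms=15.0, duration_ms=300, step_ms=1.0):
    """Toy N1 deflection only (hypothetical values), sampled from stimulus onset."""
    times = [i * step_ms for i in range(int(duration_ms / step_ms) + 1)]
    volts = [amp_uv * math.exp(-((t - peak_ms) ** 2) / (2 * width_ms ** 2)) for t in times]
    return times, volts


def most_negative_peak(times, volts, window_ms=(75, 150)):
    """Latency (ms) and amplitude (µV) of the most negative sample in the window."""
    lo, hi = window_ms
    return min(((t, v) for t, v in zip(times, volts) if lo <= t <= hi), key=lambda tv: tv[1])


# Illustrative conditions only; real values differ across studies and participants.
audio_only = n1_waveform(peak_ms=100, amp_uv=-4.0)
audiovisual = n1_waveform(peak_ms=90, amp_uv=-3.0)

lat_a, amp_a = most_negative_peak(*audio_only)
lat_av, amp_av = most_negative_peak(*audiovisual)

# For a negative component, a smaller amplitude means a value closer to zero.
latency_shift_ms = lat_a - lat_av
amplitude_reduction_uv = abs(amp_a) - abs(amp_av)
print(f"N1 peaks {latency_shift_ms:.0f} ms earlier and is "
      f"{amplitude_reduction_uv:.1f} uV smaller in the audiovisual condition")
```

With these made-up values, the script reports an N1 that peaks 10 ms earlier and is 1.0 µV smaller when visual information is added, which is the direction of change described in the studies above.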

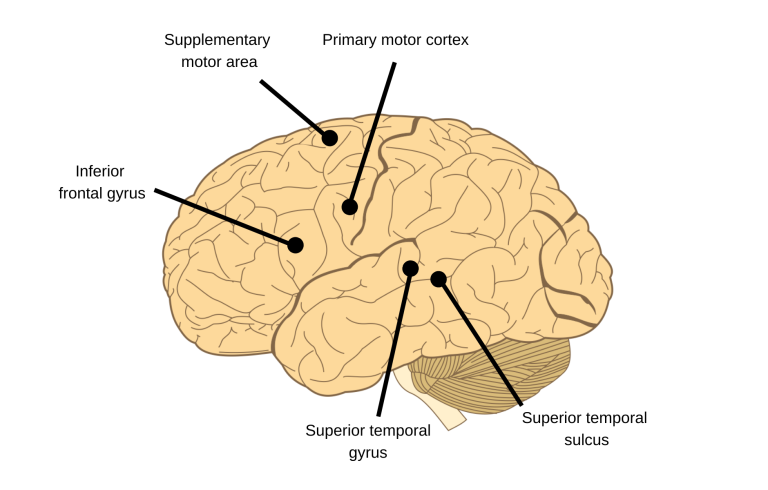

Because EEG provides little precise information about which brain regions are involved in audiovisual integration, several teams have complemented EEG studies with magnetic resonance imaging (MRI) and neurostimulation. For a meta-analysis of the brain regions involved in integrating audiovisual information during speech perception, see Erickson et al. (2014a). These regions include posterior temporal areas in both hemispheres, notably the superior temporal sulcus (STS) and the superior temporal gyrus (Beauchamp et al., 2010; Erickson et al., 2014a, 2014b; Nath & Beauchamp, 2012; see figure 2). For example, when activity in the left STS is disrupted using transcranial magnetic stimulation (TMS), participants are less likely to perceive the illusion, suggesting that this region plays an important role in audiovisual integration (Beauchamp et al., 2010). In addition, neural activity in the left STS, measured with functional MRI (fMRI), is associated with individual sensitivity to the McGurk effect: people with lower activity in this region are less likely to perceive the illusion (Nath & Beauchamp, 2012), which suggests that they integrate auditory and visual information less.

Figure 2. Localization of certain brain regions that may play a role in audiovisual integration (human brain), adapted from James.md.mz, licensed under CC BY-SA 3.0.

Other regions are also thought to play a role in audiovisual integration, such as the inferior frontal gyrus, or IFG (Erickson et al., 2014a; see figure 2). One research team observed greater activity in the IFG of both hemispheres when auditory and visual information was discordant (e.g., audio /ba/ combined with visual /ga/) than when it matched (e.g., audio /ba/ combined with visual /ba/; Murakami et al., 2018). The IFG could therefore be important for resolving inconsistencies between auditory and visual information during speech perception. This hypothesis is supported by the finding that greater activity in the left IFG is associated with a lower likelihood of perceiving the illusion (Murakami et al., 2018).

Motor areas (those that control our movements), such as the primary motor cortex or the premotor area, are also thought to be involved in audiovisual integration during speech perception (Erickson et al., 2014a; Skipper et al., 2007; see figure 2). For example, one study found that TMS applied to the part of the motor cortex that controls the lips decreased the likelihood of perceiving the illusion when auditory and visual information was discordant (Murakami et al., 2018). This study and other similar work (e.g., Sato et al., 2009; Tremblay & Small, 2011; see also the book chapter by McGettigan & Tremblay, 2017) suggest that the brain regions used to produce speech are also used to perceive it, although their specific role in audiovisual integration remains unclear.

In short, several regions of the brain, in the temporal and frontal lobes, help integrate auditory and visual information during speech perception and may contribute to the McGurk effect!

Here are some other illusions to try, also reflecting the McGurk effect:

Both videos use the same audio recording of the syllable /ba/; only the articulation (the visual information) differs. This is why you may have heard /va/ in the first video and /da/ in the second, even though the auditory information is identical (/ba/).

References:

Beauchamp, M. S., Nath, A. R., & Pasalar, S. (2010). fMRI-guided transcranial magnetic stimulation reveals that the superior temporal sulcus is a cortical locus of the McGurk effect. The Journal of Neuroscience, 30(7), 2414–2417. DOI: 10.1523/JNEUROSCI.4865-09.2010

Besle, J., Fort, A., Delpuech, C., & Giard, M.-H. (2004). Bimodal speech: Early suppressive visual effects in human auditory cortex. European Journal of Neuroscience, 20(8), 2225–2234. DOI: 10.1111/j.1460-9568.2004.03670.x

Erickson, L. C., Heeg, E., Rauschecker, J. P., & Turkeltaub, P. E. (2014a). An ALE meta‐analysis on the audiovisual integration of speech signals. Human Brain Mapping, 35(11), 5587–5605. DOI: 10.1002/hbm.22572

Erickson, L. C., Zielinski, B. A., Zielinski, J. E. V., Liu, G., Turkeltaub, P. E., Leaver, A. M., & Rauschecker, J. P. (2014b). Distinct cortical locations for integration of audiovisual speech and the McGurk effect. Frontiers in Psychology, 5, Article 534. DOI: 10.3389/fpsyg.2014.00534

Klucharev, V., Möttönen, R., & Sams, M. (2003). Electrophysiological indicators of phonetic and non-phonetic multisensory interactions during audiovisual speech perception. Cognitive Brain Research, 18(1), 65–75. DOI: 10.1016/j.cogbrainres.2003.09.004

Luck, S. J. (2014). An introduction to the event-related potential technique (2nd ed.). MIT Press.

McGurk, H., & MacDonald, J. (1976). Hearing lips and seeing voices. Nature, 264, 746–748. DOI: 10.1038/264746a0

Murakami, T., Abe, M., Wiratman, W., Fujiwara, J., Okamoto, M., Mizuochi-Endo, T., Iwabuchi, T., Makuuchi, M., Yamashita, A., Tiksnadi, A., Chang, F.-Y., Kubo, H., Matsuda, N., Kobayashi, S., Eifuku, S., & Ugawa, Y. (2018). The motor network reduces multisensory illusory perception. The Journal of Neuroscience, 38(45), 9679–9688. DOI: 10.1523/JNEUROSCI.3650-17.2018

Nath, A. R., & Beauchamp, M. S. (2012). A neural basis for interindividual differences in the McGurk effect, a multisensory speech illusion. NeuroImage, 59(1), 781–787. DOI: 10.1016/j.neuroimage.2011.07.024

Sekiyama, K., Soshi, T., & Sakamoto, S. (2014). Enhanced audiovisual integration with aging in speech perception: A heightened McGurk effect in older adults. Frontiers in Psychology, 5, Article 323. DOI: 10.3389/fpsyg.2014.00323

Skipper, J. I., van Wassenhove, V., Nusbaum, H. C., & Small, S. L. (2007). Hearing lips and seeing voices: How cortical areas supporting speech production mediate audiovisual speech perception. Cerebral Cortex, 17(10), 2387–2399. DOI: 10.1093/cercor/bhl147

Treille, A., Cordeboeuf, C., Vilain, C., & Sato, M. (2014a). Haptic and visual information speed up the neural processing of auditory speech in live dyadic interactions. Neuropsychologia, 57, 71–77. DOI: 10.1016/j.neuropsychologia.2014.02.004

Treille, A., Vilain, C., Kandel, S., & Sato, M. (2017). Electrophysiological evidence for a self-processing advantage during audiovisual speech integration. Experimental Brain Research, 235(9), 2867–2876. DOI: 10.1007/s00221-017-5018-0

Treille, A., Vilain, C., & Sato, M. (2014b). The sound of your lips: Electrophysiological cross-modal interactions during hand-to-face and face-to-face speech perception. Frontiers in Psychology, 5, Article 420. DOI: 10.3389/fpsyg.2014.00420

Treille, A., Vilain, C., Schwartz, J.-L., Hueber, T., & Sato, M. (2018). Electrophysiological evidence for audio-visuo-lingual speech integration. Neuropsychologia, 109, 126–133. DOI: 10.1016/j.neuropsychologia.2017.12.024

van Wassenhove, V., Grant, K. W., & Poeppel, D. (2005). Visual speech speeds up the neural processing of auditory speech. Proceedings of the National Academy of Sciences of the United States of America, 102(4), 1181–1186. DOI: 10.1073/pnas.0408949102

Further reading:

Tiippana, K. (2014). What is the McGurk effect? Frontiers in Psychology, 5, Article 725. DOI: 10.3389/fpsyg.2014.00725