Imagine you are at a busy social event. Conversations are flowing around you as you talk with a friend, and suddenly you hear your name being said across the room. How is it that you can listen to and understand your friend whilst also picking up a conversation across the room? This is a prime example of a phenomenon called “the cocktail party effect”. Understanding speech in the presence of background conversation is a challenge for all of us, but especially for children and older adults. In this blog post, we discuss what the cocktail party effect is and some of the cognitive mechanisms involved.

At a busy social event, many different conversations and noises are produced simultaneously. With so much going on, one could, and perhaps should, be overwhelmed by the amount of information. This assumption is partially correct: humans have a limited capacity to process information at any one time. This idea of limited capacity was discussed in the seminal paper “Some Experiments on the Recognition of Speech, with One and with Two Ears,” in which E. Colin Cherry (1953) first described the cocktail party effect. Cherry found that when we hear two messages simultaneously, we are unable to extract meaning from either message unless their content is distinct.

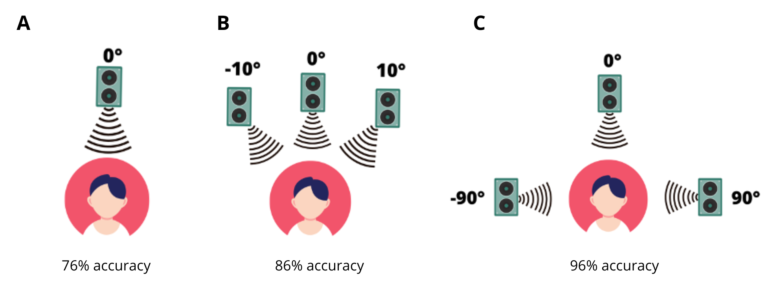

Expanding on Cherry's work, researchers found that when voices are spatially separated (e.g., three speakers located at 10 degrees, 0 degrees, and -10 degrees in front of a listener, Figure 1B), it is easier to understand one of the simultaneous messages than when all voices come from one direction (Figure 1A) (Spieth et al., 1954, cited in Arons, 1992). A larger spatial separation between the voices (e.g., 90 degrees, 0 degrees, and -90 degrees) allows people to repeat back the attended message more accurately (Figure 1C). One possible explanation is that separation makes it easier to select a single stream to attend to.

Maurizio Corbetta and Gordon L. Shulman (2002) at Washington University developed a dual-process model of how we select the focus of our attention. The first process in this model is goal-directed (“top-down”) attention. For example, if your goal is to hear your friend at a crowded party, more attention will be dedicated to that conversation. This process is supported by brain areas in the dorsal frontoparietal network. When we focus our attention on a goal (e.g., a conversation), this network may filter out task-irrelevant information so that it goes unattended. However, unattended information is not necessarily unheard. Lee M. Miller (2016), from the Department of Neurobiology at the University of California, suggests that we give different levels of attention to multiple signals. For example, if your goal is to have a conversation, the person you are speaking to will receive a high level of attention, while the low, constant hum of a washing machine will be filtered out. By dedicating only low levels of attention to background noise, we are able to better focus on the task at hand (e.g., the conversation). And yet, within this background noise, unattended signals can still break our focus and capture our attention.

The second process in Corbetta and Shulman’s model of selective attention is involuntary (“bottom-up”) attention. For example, imagine again that you are speaking to your friend at a party when someone shouts your name: your attention is drawn to that signal. This process is supported by brain areas in the right ventral frontoparietal network. Corbetta and Shulman suggest that this network acts as a “circuit breaker”, redirecting our attention to behaviourally relevant stimuli that lie outside our current focus. When this network detects an infrequent or unusual stimulus that is relevant to us, its activation increases and our attention is reoriented. For example, because your name relates to you and is unexpected, areas in this network could increase in activation and trigger a shift in attention.

Visual Information

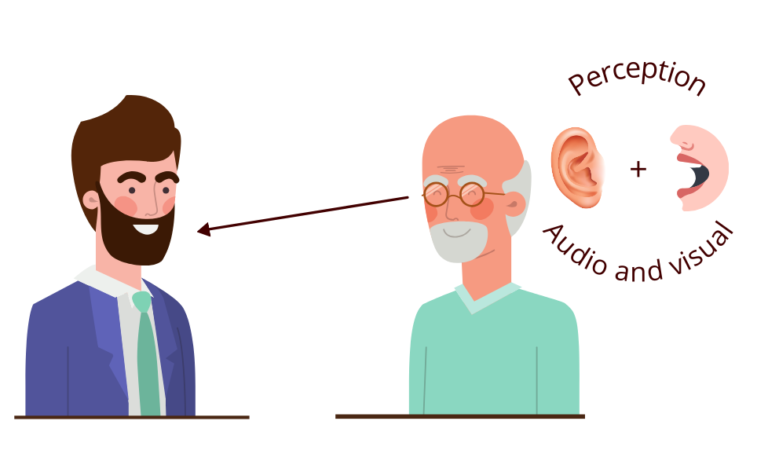

In a loud environment with many competing signals (e.g., a cocktail party), your visual system plays an important role in following a conversation. Visual speech processing refers to the ability to process the movements of the mouth, jaw, lips and (to some extent) tongue while looking at a speaker. This information helps us understand speech, especially in challenging situations such as a cocktail party.

The best-known example of visual information influencing speech perception is the McGurk-MacDonald effect, often referred to simply as the McGurk effect. It was first described by Harry McGurk and John MacDonald in 1976 at the University of Surrey. The effect shows that visual information can alter the way we perceive sounds. For example, when you are presented with the sound “ba” together with the visual stimulus “ga”, you are likely to hear the sound “da”: a sound that was neither heard nor seen, but which represents a combination of the auditory and visual stimuli. This effect is caused by the integration of auditory and visual information (Figure 3). For a more detailed look at the McGurk effect, see our blog post on the topic.

Individual Differences in Speech Production

When you are struggling to understand what your friend is saying because of loud background noise, your familiarity with the way they articulate can help you work out what they are saying. Conversely, talking with a stranger is more challenging.

Indeed, we all speak in a unique way, and these differences (for example, a person’s accent, intonation, speech rate, way of producing certain sounds, and verbal tics) make understanding speech a difficult task. Our brains must find a way to handle these interindividual differences. Shannon Heald, Serena Klos, and Howard Nusbaum (2016) from the University of Chicago suggest that we can draw on previous experience to help our understanding. For example, if your friend has a regional accent in which they rarely pronounce the “t” sound, you can use this knowledge to help process their speech in a loud environment. So, at a loud cocktail party, it is easier to talk with someone you know than with someone you are less familiar with!

Maintaining Context

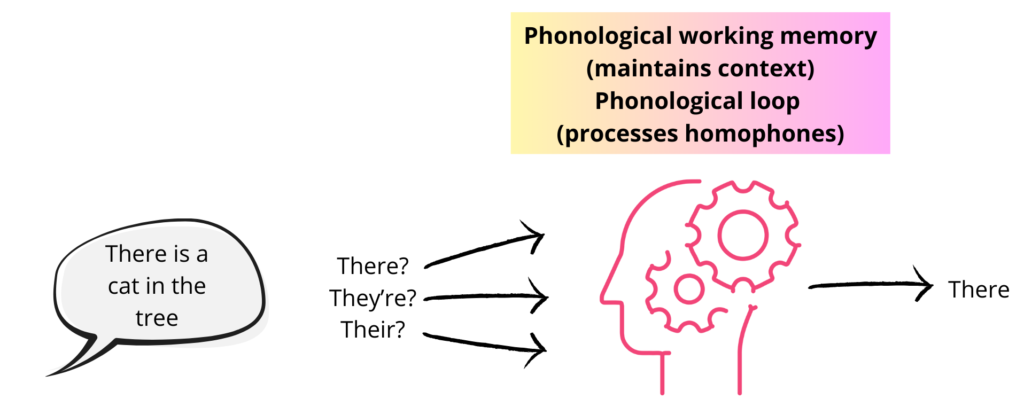

Another factor to consider is how we use the context of a word while processing it. For example, take the commonly used words “there”, “they’re”, and “their”. These words are homophones: they are pronounced the same way. Yet, depending on the context, you can tell them apart almost automatically (Figure 2).

Phonological working memory is used to maintain speech sounds or words in memory while listening to a sentence (Perrachione, Ghosh, Ostrovskaya, Gabrieli & Kovelman, 2017). This allows us to retain the context of the sentence, which aids understanding. Alan D. Baddeley and Graham J. Hitch from the University of York have suggested that two processes, which together form what they call the phonological loop, allow us to differentiate between homophones. The first process is essential for maintaining information: it allows us to rehearse one or several words in our heads and to break them down into their syllables and phonemes. The second process is used to make judgments about homophones (e.g., “they’re” or “there”). These two processes work together to determine which homophone is correct in a specific context (Figure 2).

Transition Probability

When talking to a friend at a busy social event, the background noise degrades the speech stream, which can make it hard to pick out individual words. So how are we able to break up this stream to understand what our friend is saying? Our brain uses a type of information referred to as “transition probabilities”: we calculate how likely one syllable is to follow or precede another. Each language in the world has its own set of statistics. A low transition probability usually signals a word boundary, and this information can be used to decode the speech stream.

For example, in English the letter “q” is almost always followed by “u”, so it can be assumed that they belong to the same word. By contrast, the probability that the phoneme “d” is followed by the phoneme “f” within the same word is very low in English, so it can be assumed that “f” marks the start of a new word (Dal Ben, Souza & Hay, 2021). Findings from our lab suggest that the left anterior and middle planum temporale may be recruited for the predictive aspects of this process (Tremblay, Baroni & Hasson, 2013). Using transition probabilities can help us break down the speech stream and restore parts of the signal masked by the loud environment.
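The segmentation idea described above can be illustrated with a minimal Python sketch. It assumes the speech stream has already been split into syllables (real, noisy speech is not so tidy) and uses only forward transition probabilities, P(next | current) = count(current, next) / count(current), computed over a made-up stream of four hypothetical three-syllable “words”. A boundary is placed wherever the probability drops below a threshold; the syllables, the word order and the 0.5 threshold are illustrative assumptions, not values from the studies cited in this post.

```python
from collections import Counter

# Hypothetical mini-language: four made-up three-syllable "words".
WORDS = {
    "A": ["tu", "pi", "ro"],
    "B": ["go", "la", "bu"],
    "C": ["bi", "da", "ku"],
    "D": ["pa", "do", "ti"],
}

def transition_probabilities(syllables):
    """Forward TP: P(next | current) = count(current, next) / count(current)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    # Only count syllables that have a successor, so each syllable's TPs sum to 1.
    current_counts = Counter(syllables[:-1])
    return {pair: n / current_counts[pair[0]] for pair, n in pair_counts.items()}

def segment(syllables, threshold=0.5):
    """Place a word boundary wherever the TP between two neighbours is below threshold."""
    tps = transition_probabilities(syllables)
    words, current = [], [syllables[0]]
    for prev, nxt in zip(syllables, syllables[1:]):
        if tps[(prev, nxt)] < threshold:  # unlikely transition: assume a new word starts
            words.append("".join(current))
            current = []
        current.append(nxt)
    words.append("".join(current))
    return words

# An unsegmented stream: words are concatenated with no pauses between them,
# ordered so that each word is followed equally often by each of the other three.
order = "BACADABCBDCDB"
stream = [syl for key in order for syl in WORDS[key]]

tps = transition_probabilities(stream)
print(tps[("tu", "pi")])  # 1.0: within a word
print(tps[("ro", "bi")])  # about 0.33: across a word boundary
print(segment(stream))    # golabu, tupiro, bidaku, ... recovered as separate words
```

Within each made-up word every syllable predicts the next one perfectly (a probability of 1.0), whereas across word boundaries each word can be followed by any of three others (about 0.33), so the threshold recovers the original words. Real listeners work with much noisier statistics and, as Dal Ben and colleagues discuss, weigh transition probabilities alongside other cues such as phonotactics, so a single fixed threshold is a deliberate simplification.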

Conclusion

The cocktail party effect results from numerous processes acting simultaneously. A growing body of work is exploring the intricate details of these processes, as well as the factors that can affect them, such as age, hearing and vision, language background and many others. In our lab, we investigate how age and musical experience affect the perception of speech in noise, and we study methods, such as brain stimulation, to reduce these difficulties.

References:

Arons, B. (1992). A review of the cocktail party effect. Journal of the American Voice I/O Society, 12(7), 35-50.

Cherry, E. C. (1953). Some experiments on the recognition of speech, with one and with two ears. Journal of the Acoustical Society of America, 25, 975-979.

Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3(3), 201-215.

Dal Ben, R., Souza, D. D. H., & Hay, J. F. (2021). When statistics collide: The use of transitional and phonotactic probability cues to word boundaries. Memory & Cognition, 1-11.

Heald, S., Klos, S., & Nusbaum, H. (2016). Understanding speech in the context of variability. In Neurobiology of Language (pp. 195-208). Academic Press.

McGurk, H., & MacDonald, J. (1976). Hearing lips and seeing voices. Nature, 264(5588), 746-748.

Miller, L. M. (2016). Neural mechanisms of attention to speech. In Neurobiology of Language (pp. 503-514). Academic Press.

Perrachione, T. K., Ghosh, S. S., Ostrovskaya, I., Gabrieli, J. D., & Kovelman, I. (2017). Phonological working memory for words and nonwords in cerebral cortex. Journal of Speech, Language, and Hearing Research, 60(7), 1959-1979.

Tremblay, P., Baroni, M., & Hasson, U. (2013). Processing of speech and non-speech sounds in the supratemporal plane: Auditory input preference does not predict sensitivity to statistical structure. Neuroimage, 66, 318-332.