An important facet of our work in the lab is the study of spoken language, in particular voice and articulation (the sensorimotor components of language) and how they change with aging. We have already introduced the components of speech through a trumpet analogy; today we describe the analyses we perform to study voice and speech!

To assess spoken language, we record people when they speak. Depending on the specific research question, these recordings can take several forms:

- Sustained vowel production (for voice analysis);

- Production of syllable sequences (mainly for the analysis of articulation and speech rate, but also voice and resonance);

- Repetition of syllables or words presented via headphones (for the analysis of articulation, lexical access, and phonological processing);

- Generation of words from a letter or an image (for the analysis of articulation, lexical access, and phonological or semantic processing);

- Text reading (mainly for articulation analysis, but also voice and resonance);

- Narration of a personal story (mainly for the analysis of articulation, speech rate and prosody, but also voice and resonance measurements, as well as the analysis of word choice, word complexity and sentence structure and multiple measures of discourse quality and efficiency).

The preparation for the analyses begins during the participant’s visit to the lab. Regardless of the type of recording, we must make sure that the microphone picks up a good-quality signal: it must be loud enough but not too loud, free of background noise and crackling, and sampled at a high rate (typically 44,100 Hz, that is, 44,100 measurements per second). To ensure that the position of the microphone remains constant throughout the recording, we use headset microphones. The recordings are made in our large soundproof room (Figure 1), which allows us to obtain high-quality recordings without background noise.
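As a rough illustration of what "loud enough, but not too loud" can mean in practice, the sketch below reports the peak and average (RMS) level of a signal relative to digital full scale. It is a minimal example under stated assumptions, not the lab's actual procedure: the function name `level_report`, the clipping threshold and the synthetic test tone are all hypothetical.

```python
import math


def level_report(samples, clip_threshold=0.999):
    """Summarise the level of a recording given as floats in [-1.0, 1.0]."""

    def to_dbfs(value):
        # Decibels relative to full scale; silence maps to minus infinity.
        return 20 * math.log10(value) if value > 0 else float("-inf")

    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return {
        "peak_dbfs": to_dbfs(peak),
        "rms_dbfs": to_dbfs(rms),
        # Hypothetical rule: a peak at (or very near) full scale suggests clipping.
        "clipped": peak >= clip_threshold,
    }


# Synthetic stand-in for a recording: one second of a 220 Hz tone at half of
# full scale, with an assumed sampling rate of 44,100 samples per second.
sample_rate = 44100
tone = [0.5 * math.sin(2 * math.pi * 220 * n / sample_rate) for n in range(sample_rate)]
report = level_report(tone)
```

A peak close to 0 dBFS would indicate a signal that is too loud, while a very low RMS level would indicate one that is too quiet; where exactly to draw those lines depends on the equipment and the protocol.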

Figure 1. Our soundproof room.

After collecting data, the analyses are conducted in the computer room. It is a meticulous and time-consuming process that includes several stages, detailed below.

1- Cleaning and definition of sections of interest

Once the recordings have been made, they are saved in high resolution (typically 24-bit quantization, which provides about 144 dB of dynamic range) on our secure server, following a strict file-naming protocol. The first stage of analysis is cleaning. We ensure that the recordings do not contain instructions from the experimenters, and that laughter, coughing, throat clearing, and other noises are removed.
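The 144 dB figure follows from the standard relation between bit depth and theoretical dynamic range, roughly 6 dB per bit. The short sketch below works through that arithmetic; the function name is our own and the result is an idealised upper bound, not a measurement of any particular recording chain.

```python
import math


def dynamic_range_db(bits):
    """Theoretical dynamic range of linear quantization with the given bit depth."""
    # Ratio between the largest representable amplitude and one quantization step.
    return 20 * math.log10(2 ** bits)


range_24_bit = dynamic_range_db(24)  # about 144.5 dB
range_16_bit = dynamic_range_db(16)  # about 96.3 dB, the CD-quality bit depth
```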

Next, we use acoustic analysis freeware (Praat) to identify the parts of the recording that will be analyzed, i.e., we identify the start and the end of the recordings.

2- Voice analysis

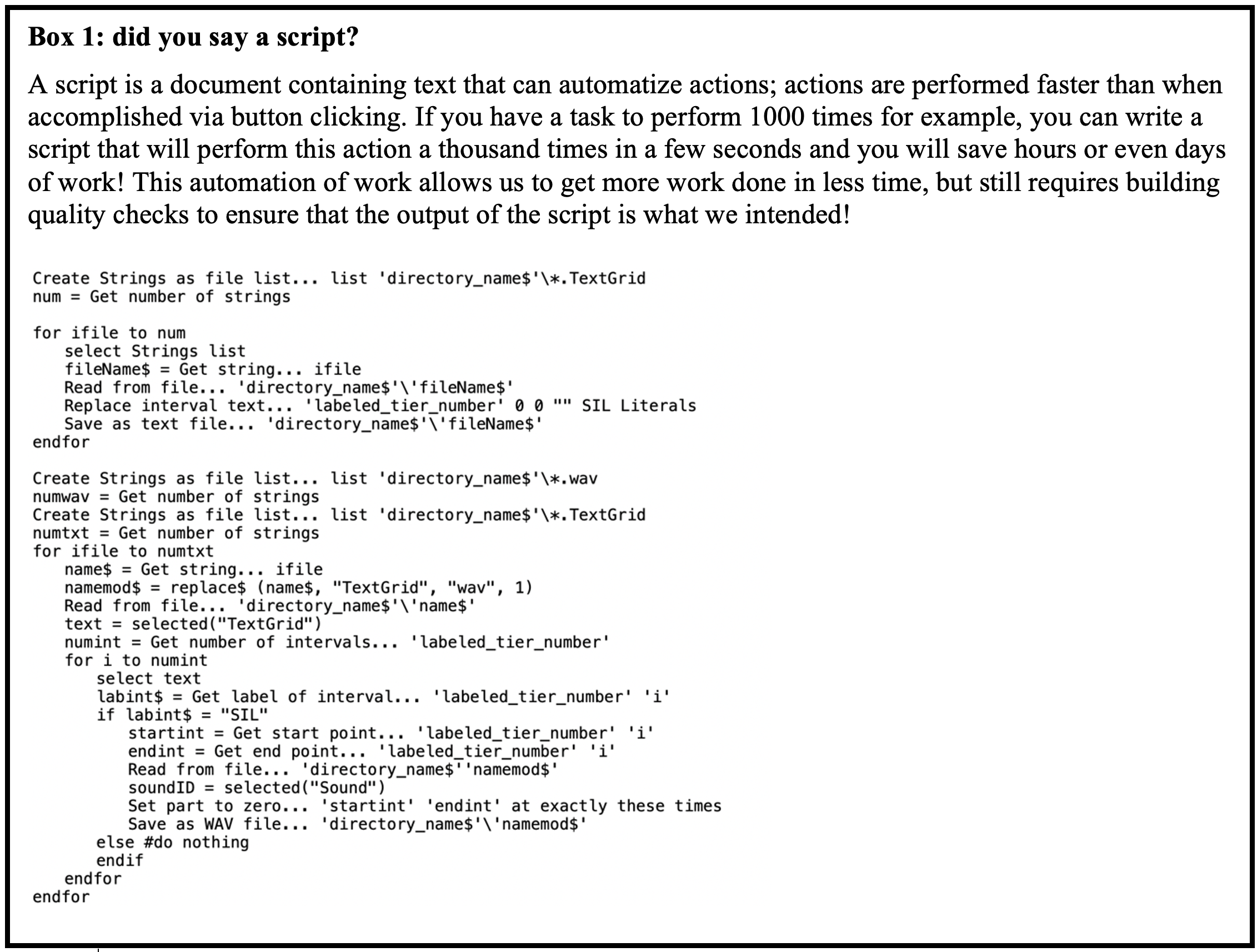

To analyze the voice (phonation), Praat analysis scripts (see Box 1) are used to extract different measurements from the segmented recordings:

- Measurements of voice pitch (how high or low the voice is), such as the mean fundamental frequency, which reflects a person’s habitual pitch, and the maximum and minimum pitch, which reflect a person’s ability to control their pitch (all measured in hertz).

- Voice loudness measurements, such as minimum, maximum, and average voice loudness (measured in decibels).

- Measures of vocal stability, such as “jitter” and “shimmer”, which quantify the cycle-to-cycle stability of pitch and loudness, respectively.
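To make the measurements listed above more concrete, here is a minimal sketch of how mean fundamental frequency, jitter and shimmer can be computed once the duration and peak amplitude of each vocal fold cycle are known. It uses the common "local" definitions (mean absolute difference between consecutive cycles, divided by the mean); in the lab these values come from Praat scripts, so the function names and the toy numbers below are purely illustrative assumptions.

```python
from statistics import mean


def local_perturbation(values):
    """Mean absolute difference between consecutive values, as a percentage of the mean."""
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return 100 * mean(diffs) / mean(values)


def voice_summary(periods_s, peak_amplitudes):
    """Summarise a sustained vowel from per-cycle periods (seconds) and amplitudes."""
    return {
        # Assumption: mean F0 is taken as the inverse of the mean period.
        "mean_f0_hz": 1 / mean(periods_s),
        "jitter_local_pct": local_perturbation(periods_s),
        "shimmer_local_pct": local_perturbation(peak_amplitudes),
    }


# Toy data: five consecutive cycles of a voice at roughly 200 Hz.
periods = [0.0050, 0.0051, 0.0049, 0.0050, 0.0050]
amplitudes = [0.80, 0.82, 0.79, 0.81, 0.80]
summary = voice_summary(periods, amplitudes)
```

A perfectly regular voice would give 0% for both jitter and shimmer; larger values indicate less stable pitch and loudness.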

3- Transcriptions

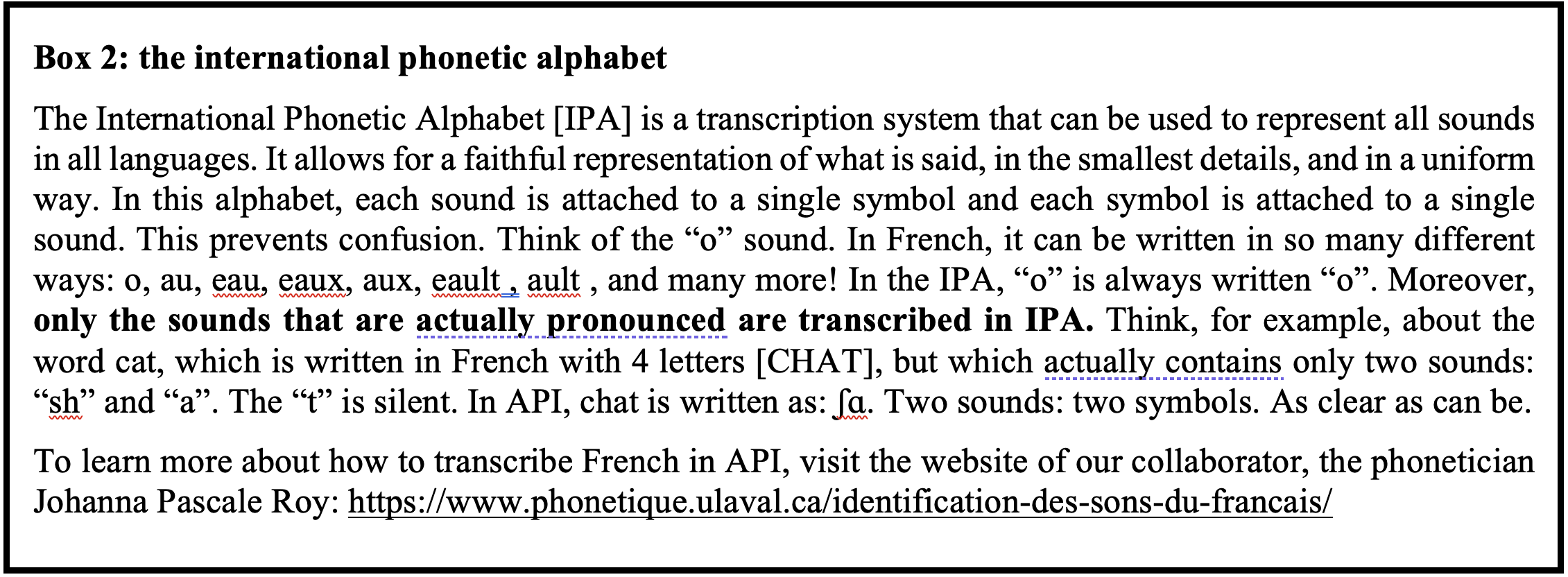

A transcription of what is said is usually produced. The recording is either transcribed directly in the phonetic alphabet (see Box 2) or first transcribed using the conventional French alphabet and then converted to the phonetic alphabet. To ensure that the transcription is accurate, it is always done by two people working independently; we then compare the two transcriptions to establish a consensus transcription.
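The comparison of two independent transcriptions can be partly automated by listing the places where they differ, so that the transcribers only need to discuss those. The sketch below does this with Python's standard `difflib` module; it is an illustration under our own assumptions (transcriptions represented as lists of symbols, hypothetical function names), not the lab's actual workflow.

```python
from difflib import SequenceMatcher


def transcription_disagreements(first, second):
    """Return the spans where two transcriptions (lists of symbols) differ."""
    matcher = SequenceMatcher(a=first, b=second, autojunk=False)
    return [
        (tag, first[i1:i2], second[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


def agreement_ratio(first, second):
    """Similarity between 0 and 1, where 1 means identical transcriptions."""
    return SequenceMatcher(a=first, b=second, autojunk=False).ratio()


# Toy example: two transcribers disagree on the first consonant of "bateau".
transcriber_a = ["b", "a", "t", "o"]
transcriber_b = ["p", "a", "t", "o"]
differences = transcription_disagreements(transcriber_a, transcriber_b)
ratio = agreement_ratio(transcriber_a, transcriber_b)
```

Each reported difference still has to be resolved by listening to the recording again; the code only points to where the two versions diverge.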

4- Segmentation

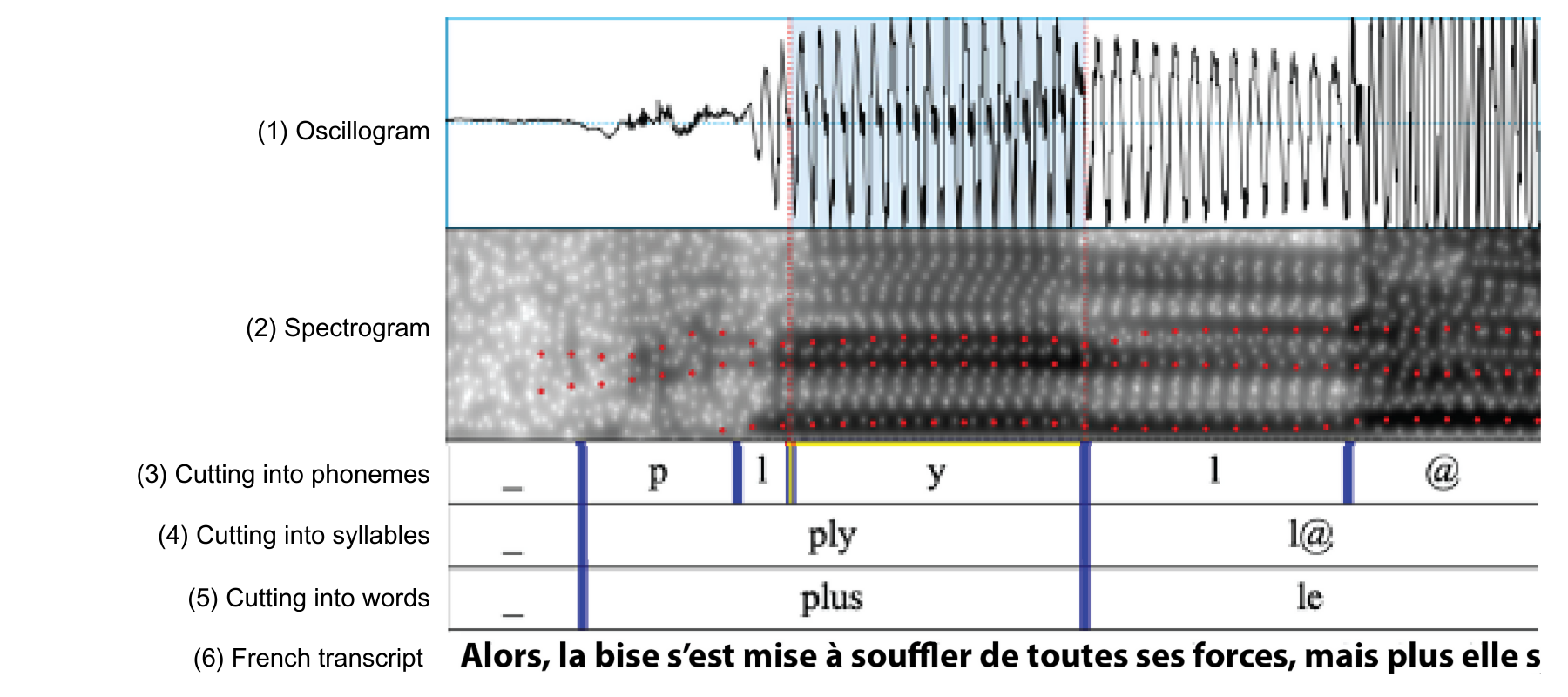

Next, we use another script that cuts the recording into words, syllables and even phonemes (consonants and vowels) (see Figure 2). We then have to check the entire recording to make sure that the segmentation is adequate, and we correct it carefully when it is not. Indeed, even if the analysis scripts are powerful, speech is so variable that no automatic analysis is perfect!

Figure 2. Example of a segmented recording.

5- Speech analysis

These segmented recordings are used to examine the errors, sound by sound. This step is performed by at least two people who independently determine, using an analysis grid, the type of error for each incorrect sound. Is it an inversion of phonemes, as in “maraconi” instead of “macaroni”? The insertion of an additional sound, as in “flish” instead of “fish”? Or a simplification, as in “umbella” instead of “umbrella”?
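The three error types above can be told apart with very simple rules, which the sketch below applies to the examples from the text. It is a deliberately simplified illustration: letters stand in for phonemes, the category names and the function `classify_error` are our own, and a real analysis grid covers many more cases and relies on human judgment.

```python
def is_subsequence(short, long):
    """True if every symbol of `short` appears in `long` in the same order."""
    remaining = iter(long)
    return all(symbol in remaining for symbol in short)


def classify_error(target, produced):
    """Assign a coarse error type to a produced form, given the target form."""
    if produced == target:
        return "correct"
    if sorted(produced) == sorted(target):
        # Same sounds, different order.
        return "inversion"
    if len(produced) > len(target) and is_subsequence(target, produced):
        # All target sounds are present, plus at least one extra sound.
        return "insertion"
    if len(produced) < len(target) and is_subsequence(produced, target):
        # At least one target sound is missing.
        return "simplification"
    return "other"


labels = [
    classify_error("macaroni", "maraconi"),
    classify_error("fish", "flish"),
    classify_error("umbrella", "umbella"),
]
```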

The analysis of speech also includes the measurement of speech rate, that is, the number of syllables or words produced per second, as well as its coefficient of variation (which quantifies the regularity of speech rate over time). Additional metrics include the duration of vowels and/or consonants and the duration of silences, which provide further information about speech rate.
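As a worked example of these two measures, the sketch below computes a speech rate in syllables per second and a coefficient of variation (standard deviation divided by the mean). We assume, for illustration only, that the coefficient of variation is computed over syllable durations and uses the sample standard deviation; the durations are invented.

```python
from statistics import mean, stdev


def speech_rate(n_syllables, duration_s):
    """Number of syllables produced per second."""
    return n_syllables / duration_s


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean (unitless)."""
    return stdev(values) / mean(values)


# Invented durations (in seconds) of four consecutive syllables.
syllable_durations = [0.20, 0.25, 0.15, 0.20]
rate = speech_rate(len(syllable_durations), sum(syllable_durations))  # about 5 syllables/s
variability = coefficient_of_variation(syllable_durations)  # about 0.20
```

A coefficient of variation close to 0 indicates very regular timing; higher values indicate more irregular speech.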

In several projects, we also calculate response time, that is, the time a person takes to initiate the production of a syllable or a word. This tells us about the preparation time required. The more complex or rare the item is, the longer the response time. For example, it takes longer to prepare the word “wheelbarrow” than the word “table”, because “table” is simpler and more frequent. Depending on the nature of the task, response time tells us about articulatory preparation, lexical selection, or other processes at play.
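In its simplest form, response time is the delay between the presentation of the stimulus and the first moment at which the speech signal rises above the background level. The sketch below shows that idea with a fixed amplitude threshold. The threshold value, the function name and the synthetic signal are assumptions made for illustration; real speech onsets are harder to detect and need to be checked by hand.

```python
def response_time_s(samples, sample_rate, stimulus_onset_s, threshold=0.05):
    """Delay (in seconds) between the stimulus and the first sample above threshold."""
    start = int(stimulus_onset_s * sample_rate)
    for index in range(start, len(samples)):
        if abs(samples[index]) >= threshold:
            return index / sample_rate - stimulus_onset_s
    return None  # No response detected after the stimulus.


# Synthetic trial sampled at 1,000 Hz: silence for 1.5 s, then a response.
# The stimulus is presented 1.0 s into the recording.
signal = [0.0] * 1500 + [0.3] * 500
delay = response_time_s(signal, sample_rate=1000, stimulus_onset_s=1.0)
```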

6- Phonetic analysis

The final step is phonetic analysis, i.e., the analysis of the acoustic realization of consonants and/or vowels. Examples include the spectral composition of vowels, the time it takes for vocal cord vibration to become visible in the signal, and many other characteristics. This information is extracted with scripts and verified manually. These analyses document the articulatory difficulties of the speakers (if any) as well as their idiosyncrasies. To develop the best possible phonetic analyses, we regularly collaborate with phoneticians: Johanna-Pascale Roy at Université Laval, and Anna Marczyk at the Université de Toulouse in France.

In short, the analysis of spoken language is detailed, meticulous, and time-consuming! Projects including this type of measure generally stretch over several months, even several years!

To learn more about our published projects on spoken language, visit our website:

For voice analysis:

Lortie, C., Rivard, J., Thibeault, M., *Tremblay, P. (2016) The moderating effect of frequent singing on voice aging. Journal of Voice.

Lortie, C.L., Thibeault, M., Guitton, M.J., Tremblay, P. (2015) Effects of age on the amplitude, frequency and perceived quality of voice. Age, 37(6):117.

Lortie, C.L., Thibeault, M., Guitton, M.J., Tremblay, P. (2018) Age differences in voice evaluation: from auditory-perceptual evaluation to social interactions. Journal of Speech, Language, and Hearing Research, 61(2):227-245.

For speech analysis:

Marczyk, A., Roy, J.-P., Vaillancourt, J., *Tremblay, P. (2022) Learning transfer from singing to speech: Insights from vowel analyses in aging amateur singers and non-singers. Speech Communication, 141:28-39.

Frazer-McKee, Macoir, J., Gagnon, L., Tremblay, P. (2020) Towards a standardized manual annotation protocol for verbal fluency data. Conference paper: Linguistic Annotation Workshop XIV, pp. 160–166.

Tremblay, P., Poulin, J., Martel-Sauvageau, V., Denis, C. (2019) Age-related deficits in speech production: from phonological planning to motor implementation. Experimental Gerontology.

Tremblay, P., Deschamps, I., Bédard, O., Tessier, M.-H., Carrier, M., Thibeault, M. (2018) Aging of speech production, from articulatory accuracy to motor timing. Psychology and Aging, 33(7):1022-1034.

Tremblay, P., Sato, M., Deschamps, I. (2017) Age-related differences in speech production: an fMRI study of healthy aging. Human Brain Mapping, (5):2751–2771.

Bilodeau-Mercure, M., Tremblay, P. (2016) Impact of aging on sequential speech production: articulatory and physiological factors. Journal of the American Geriatrics Society, 64(11):e177-e182. doi: 10.1111/jgs.14491.

Tremblay, P., Deschamps, I. (2015) Structural brain aging and speech production: a surface-based brain morphometry study. Brain Structure and Function, 221(6):3275-3299.

Bilodeau-Mercure, M., Kirouac, V., Langlois, N., Ouellet, C., Gasse, I., Tremblay, P. (2015) Movement sequencing in normal aging: speech, oro-facial and finger movements. Age, 37(4):37-78.

Suggested readings: