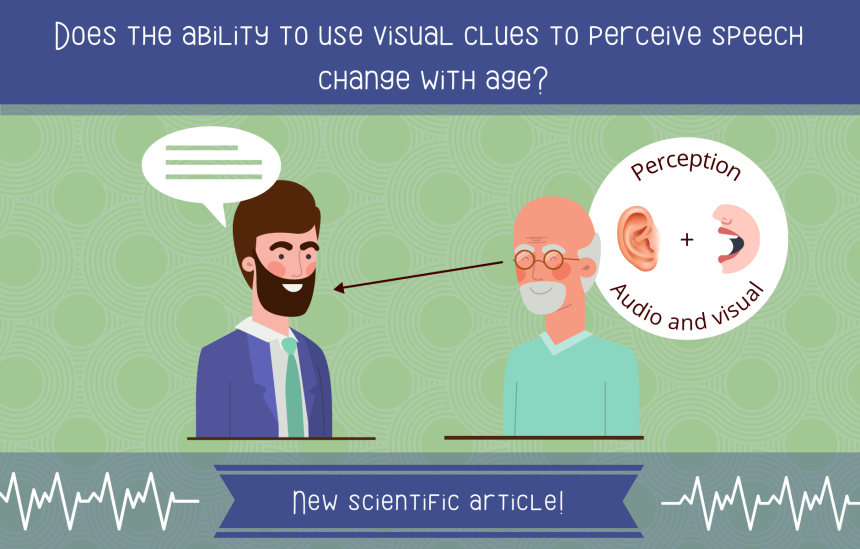

The influence of visual information on speech perception is well known. For example, when we are in a noisy environment, lipreading makes it easier to understand speech.

In this context, our brain combines the auditory and visual information related to speech, which corresponds to the phenomenon of audiovisual integration. A concrete example of this phenomenon is the McGurk effect.

Our laboratory’s director, Pascale Tremblay, along with her French collaborators Marc Sato, Anahita Basirat and Serge Pinto, carried out a research project in which they studied this phenomenon. More specifically, they studied the impact of different types of visual cues on the ability to perceive speech, and examined whether the process of audiovisual integration changes with age.

Good news for the team: the scientific article presenting the results of this study has just been accepted for publication in the journal Neuropsychologia! We are taking this opportunity to provide you with a summary of the study and its results.

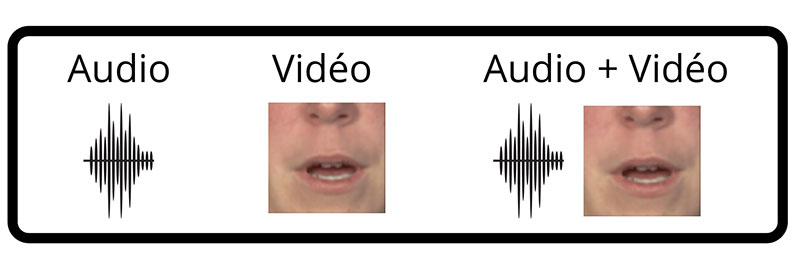

In the study, 34 people (17 young adults between 20 and 42 years old, as well as 17 older adults between 60 and 73 years old) undertook a speech perception test during which syllables (pa, ta or ka) were presented to them, one after the other. Syllables were presented in three sensory modalities: auditory only, visual only (video of a person pronouncing the syllable, but without sound), or audiovisual (combined audio and video), as shown in Figure 1.

Figure 1. Illustration of the three syllable presentation modalities during the speech perception test.

The participants had to indicate which of the three syllables had been presented by pressing a button. Hints were provided to the participants on a portion of the trials. For example, a written syllable could appear onscreen for a short period of time, indicating which syllable was played. The participants’ performance on the test was measured. Their neurophysiological responses related to the perception of speech (and more specifically, the P1-N1-P2 complex) were also measured using electroencephalography, by means of electrodes placed on the scalp.

Here is a summary of the main results:

- The ability of older adults to read lips was significantly reduced compared with that of young adults.

- Visual information (video of the syllable being pronounced) helped both the younger and the older group to perceive speech, but the benefit afforded by the visual information was greater in the young adult group.

- Visual cues (for example, the appearance of written syllables) facilitated speech perception in a similar manner in both young and older adults. Thus, the ability to integrate auditory and visual information seems to be preserved in older people, despite their reduced ability to read lips.

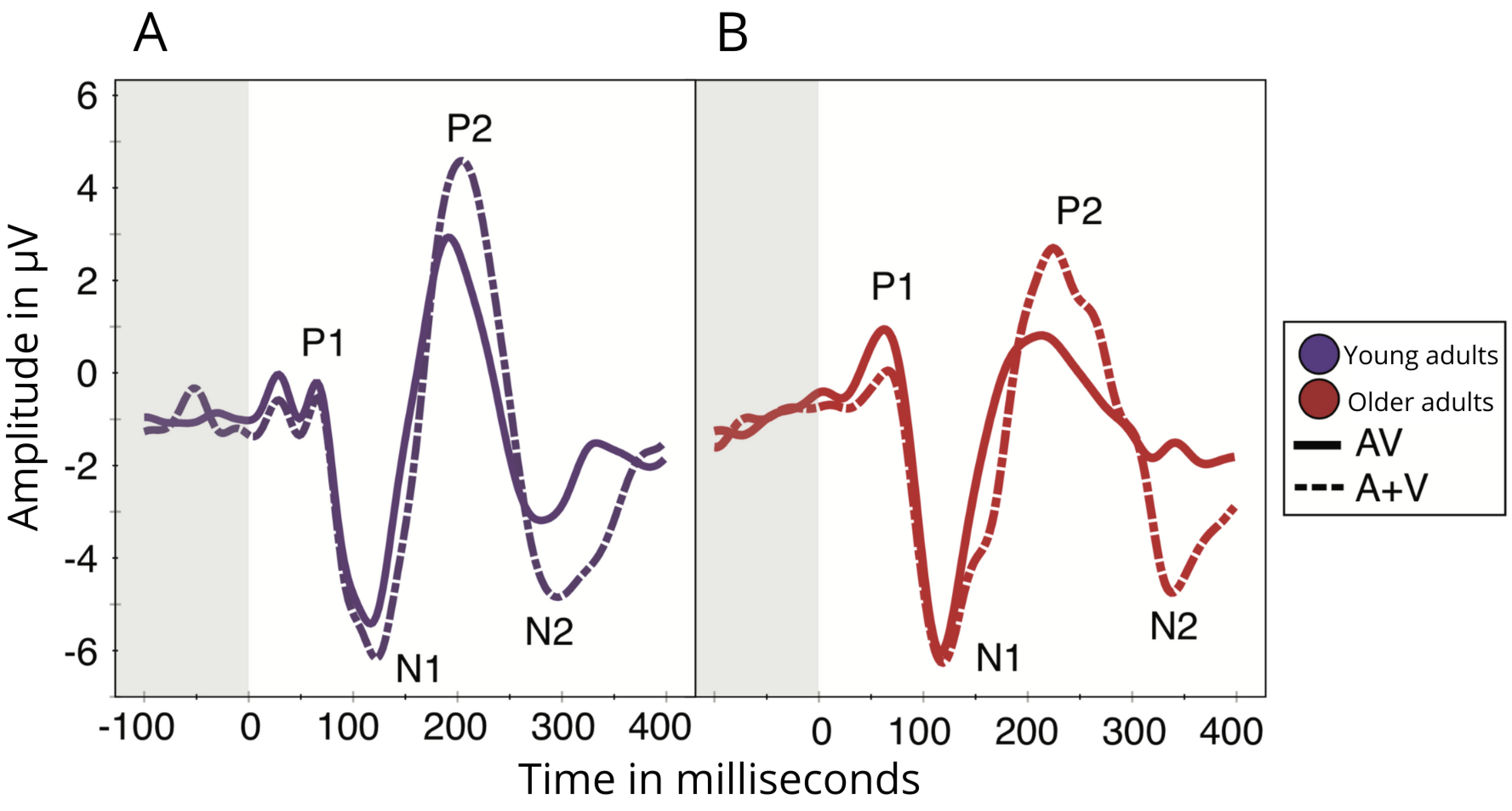

- The neurophysiological responses differed between younger and older adults (e.g. the peaks of the P2 and N2 evoked potentials, illustrated in Figure 2, were reduced in amplitude and occurred later in the older adult group than in the younger group), which indicates that age influences the cerebral activity related to the perception of speech. The processes most affected were the late integration processes, not the early auditory processes.

- In the older adults, the neurophysiological responses (specifically, the amplitude of the P2 evoked potential) were associated with performance on the speech perception test.

Figure 2. Illustration of the neurophysiological responses measured by EEG in young adults (A) and in older adults (B) during the speech perception test.

In short, our study shows that age influences speech perception and audiovisual integration. The results provide interesting avenues for the development of interventions aimed at improving older adults’ ability to perceive speech. Such interventions could, for example, target the ability to process visual information (e.g. lipreading) and to integrate it with auditory information in order to compensate for hearing loss.

Link to the article: Tremblay, P., Pinto, S., Basirat, A., Sato, M. (2021) Visual prediction cues can facilitate behavioural and neural speech processing in young and older adults. Neuropsychologia, 159: 1-17.