Speech Perception in Noise: Facilitatory Mechanisms of the Peripheral Auditory System

Introduction

Everyday life in modern societies bombards us with a constant array of noises of varying intensity that can disrupt communication. Indeed, from the moment we wake up, the noise from the coffee machine, household appliances, water flowing from the tap or shower, or even the hair dryer can impede speech comprehension. Similarly, when we leave the house, the noise of the wind, car engines, buses, honking, and construction sites can interfere with the sound source we wish to hear. Some people are fortunate to work in a quiet environment, but many workers must protect their hearing against potentially harmful noise sources, which makes speech comprehension even more challenging. These challenges even follow us into our leisure activities and outings. Just think of the communication difficulties one may encounter in pubs or restaurants during peak hours, where background music is often added to the chatter of multiple simultaneous conversations and to all the little ambient noises, such as footsteps, doors opening and closing, and dishes being placed on tables. We can also think of the communication difficulties encountered during large gatherings such as conferences or sporting events. In short, communication difficulties caused by the presence of noise can occur in almost all spheres of human activity.

But how do we manage to understand speech despite all these sound barriers?

That is a question worth delving into. In this article, we propose to explore this issue by discussing the biological mechanisms of peripheral hearing that enable speech comprehension in noise. As opposed to central mechanisms that involve the brainstem and brain, peripheral mechanisms involve the structures of the outer ear, middle ear, inner ear, and auditory nerve. We will therefore examine the mechanisms specific to each of these structures that enhance our ability to perceive speech in noise. In the second post of this series, we will present the central mechanisms that serve the same purpose.

To sharpen your knowledge about the central auditory system in preparation for our next article, we invite you to consult our previous articles that have addressed this topic:

The central subcortical auditory system

https://speechneurolab.ca/en/the-cocktail-party-explained/

The auditory cortex

https://speechneurolab.ca/en/le-cortex-auditif/

The cocktail party explained

https://speechneurolab.ca/en/the-cocktail-party-explained/

Let us first look at how difficulties in speech perception in noise affect people around the world.

It is estimated that 5 to 15% of referrals to specialized hearing clinics, such as audiology clinics, are motivated by difficulties in speech perception in noise. These difficulties, long associated with age-related hearing loss (presbycusis), are now known to occur even in people without hearing loss. Here are some examples:

- A study conducted in the United Kingdom in 1989 reported that about 14% of people aged 17 to 30 years and 20% of those aged 31 to 40 years experienced difficulties in speech perception in noise even though only a small proportion of them had a hearing loss (Davis, 1989).

- A study in Finland in 2011 reported that about 60% of adults aged 54 to 66 years experienced significant difficulties in speech perception in noise despite having normal hearing acuity (Hannula et al., 2011).

- A study in the United States in 2015 reported that 12% of adults aged 21 to 67 years with normal hearing acuity complained of difficulties in speech perception in noise (Tremblay et al., 2015).

- Studies conducted in Australia in 2013 and 2015 reported that 37 to 39% of adults aged 18 to 35 years experienced difficulties hearing or following a conversation in a noisy environment, even though the prevalence of hearing loss is estimated at only 5% in this population (Gilliver, Beach, and Williams, 2013 and 2015).

These studies from four different countries show that the prevalence of difficulties in speech perception in noise can vary between ~12 and ~60% among people aged 17 to 67 years, although the vast majority of those surveyed in these studies had normal hearing acuity. This suggests that the prevalence of these difficulties is even greater on a global scale if we consider people with a hearing disorder and those over the age of 67 years.

For a refresher on the anatomical concepts related to peripheral hearing, we invite you to consult our previous articles that have covered this subject.

The peripheral auditory system

https://speechneurolab.ca/en/the-peripheral-auditory-system/

The pathophysiological mechanisms of presbycusis (in French: Les mécanismes physiopathologiques de la presbyacousie; see section 2, Some anatomical concepts)

https://speechneurolab.ca/les-mecanismes-physiopathologiques-de-la-presbyacousie

Outer Ear Transfer Function

The first mechanism to intervene in auditory perception is the outer ear. Many of us have wondered why the outer ear has such a peculiar shape. This shape is not simply the result of chance. Indeed, it amplifies a range of sounds that are particularly useful for recognizing and understanding speech in unfavourable sound environments. This mechanism, well documented in the scientific literature, is known as the outer ear transfer function. On average, this function provides an amplification of 10 to 20 decibels for sounds between 2 and 5 kHz, which corresponds to the speech sounds /k/, /t/, /d/, /f/, /v/, /j/, /ch/. Amplifying these specific sounds is very useful when it comes to distinguishing between phonetically similar words, such as the French words “temps” (time) and “dent” (tooth) or “feu” (fire) and “vœux” (vows), in noisy contexts. Note that this transfer function amplifies low-frequency sounds very little, if at all, which is an advantage given that most environmental noises are in the low-frequency range. This function thus favours the audibility of the fine acoustic cues of speech such as consonants.
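To make these decibel values more concrete, here is a minimal Python sketch. It assumes a deliberately crude model of the outer ear: a flat 15 dB boost between 2 and 5 kHz (the midpoint of the 10 to 20 dB range mentioned above) and no boost anywhere else. The function names and the flat shape are illustrative assumptions; a real outer ear transfer function is smooth and differs from one person to another.

```python
# Hypothetical, simplified model: a flat 15 dB boost between 2 and 5 kHz
# and no boost elsewhere. Real transfer functions are smooth and vary
# between individuals.
BOOST_DB = 15.0
BAND_HZ = (2000.0, 5000.0)


def outer_ear_gain_db(freq_hz: float) -> float:
    """Return the assumed outer ear gain (in dB) at a given frequency."""
    low, high = BAND_HZ
    return BOOST_DB if low <= freq_hz <= high else 0.0


def db_to_pressure_ratio(gain_db: float) -> float:
    """Convert a gain in dB to a sound-pressure (amplitude) ratio."""
    return 10.0 ** (gain_db / 20.0)


def snr_change_db(signal_hz: float, noise_hz: float) -> float:
    """Change in signal-to-noise ratio when a signal and a noise located
    at different frequencies both pass through the modelled outer ear."""
    return outer_ear_gain_db(signal_hz) - outer_ear_gain_db(noise_hz)


if __name__ == "__main__":
    # A consonant cue near 3 kHz against low-frequency noise near 250 Hz.
    print(snr_change_db(3000.0, 250.0))          # prints 15.0
    print(round(db_to_pressure_ratio(10.0), 2))  # prints 3.16
    print(round(db_to_pressure_ratio(20.0), 2))  # prints 10.0
```

In other words, a 10 to 20 dB boost corresponds to sound pressure being multiplied by roughly 3 to 10, and because the low-frequency noise in this toy example receives no boost, the whole gain translates into a better signal-to-noise ratio for the consonant.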

Instinctively, we know how to take advantage of this outer ear transfer function. Just think of a person who places their open hand behind their ear to act as an additional resonator to target and maximize the audibility of their interlocutor’s voice. Moreover, head movements often allow us to favour this transfer function. By orienting one or the other of our ears towards the sound signal of interest, we maximize the use of this function, which in turn allows us to better distinguish speech from ambient noise.

Middle Ear Acoustic Reflex

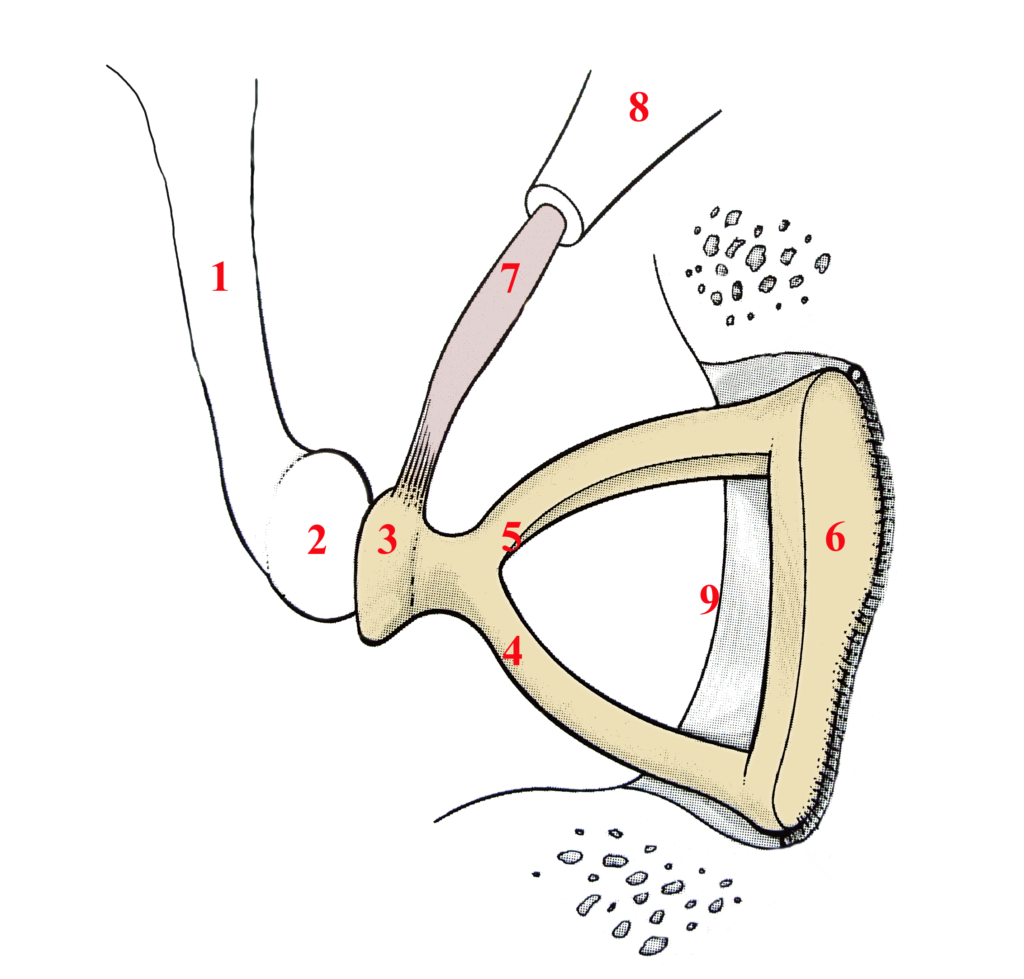

Sometimes called the stapedius reflex, the acoustic reflex of the middle ear serves primarily to protect the cochlea from high sound volumes. It works by partially immobilizing the ossicular chain when a loud sound is detected by a brainstem structure named the superior olivary complex. This task falls to the stapedius muscle, the smallest muscle in the human body, which measures one to two millimetres (Figure 3, number 7). The stapedius muscle originates on a small bony promontory named the pyramidal eminence (Figure 3, number 8) and inserts at the head of the stapes (Figure 3, number 3); its contraction limits the transmission of sound vibrations towards the cochlea. As we mentioned earlier, most environmental noises are low-frequency sounds. The stapedius reflex happens to be particularly effective at reducing the intensity of low-frequency sounds, which helps to favour the audibility of speech sounds (more specifically the range of consonants) in noisy contexts. This is explained by the fact that the stiffening of the ossicular chain has little attenuating effect on the transmission of high-frequency sounds. However, it should be noted that this muscle, like all muscles in the body, can become fatigued. Thus, in a situation of prolonged exposure to loud sounds, the advantage provided by the stapedius muscle can temporarily disappear, making the ear more vulnerable to the negative effects of noise.
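The frequency-selective effect described above can be illustrated with a small Python sketch. Every number in it is a hypothetical value chosen for illustration only (the trigger level, the amount of attenuation, and the frequency edges are not taken from this article or from measurements), and the linear taper between the two edges is simply a convenient assumption.

```python
# Hypothetical values chosen only for illustration; they are not taken
# from the article or from measurements.
REFLEX_THRESHOLD_DB = 85.0   # assumed overall level that triggers the reflex
MAX_ATTENUATION_DB = 15.0    # assumed attenuation of low frequencies
LOW_EDGE_HZ = 1000.0         # full attenuation at or below this frequency
HIGH_EDGE_HZ = 2000.0        # no attenuation at or above this frequency


def reflex_attenuation_db(freq_hz: float, overall_db: float) -> float:
    """Attenuation (in dB) applied at freq_hz by the assumed reflex model."""
    if overall_db < REFLEX_THRESHOLD_DB:
        return 0.0  # too soft: the reflex is not triggered
    if freq_hz <= LOW_EDGE_HZ:
        return MAX_ATTENUATION_DB
    if freq_hz >= HIGH_EDGE_HZ:
        return 0.0
    # Linear taper between the two frequency edges.
    fraction = (HIGH_EDGE_HZ - freq_hz) / (HIGH_EDGE_HZ - LOW_EDGE_HZ)
    return MAX_ATTENUATION_DB * fraction


def transmitted_level_db(freq_hz: float, component_db: float,
                         overall_db: float) -> float:
    """Level of one sound component after the modelled reflex."""
    return component_db - reflex_attenuation_db(freq_hz, overall_db)


if __name__ == "__main__":
    overall = 90.0  # loud scene, above the assumed trigger level
    noise = transmitted_level_db(250.0, 90.0, overall)       # low-frequency noise
    consonant = transmitted_level_db(3000.0, 70.0, overall)  # high-frequency cue
    print(noise, consonant)   # prints 75.0 70.0
    print(consonant - noise)  # prints -5.0 (it was -20.0 before the reflex)
```

In this toy scene, the low-frequency noise loses 15 dB while the high-frequency consonant cue is left untouched, so the gap between the two shrinks from 20 dB to 5 dB.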

To learn about how acoustic reflexes are measured, we invite you to consult one of our previous articles that covered this topic.

Audiology and the work of audiologists (see: Acoustic reflexes)

https://speechneurolab.ca/en/audiology-and-the-work-of-audiologists/

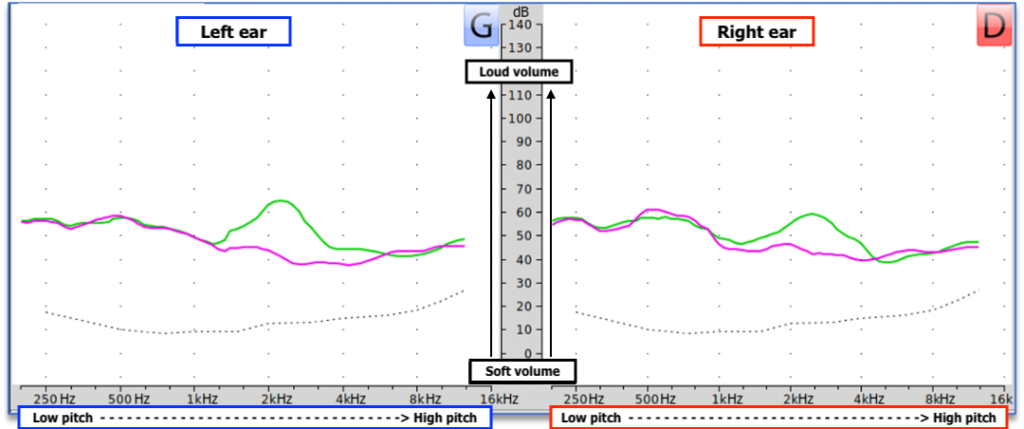

Cochlear Tonotopy

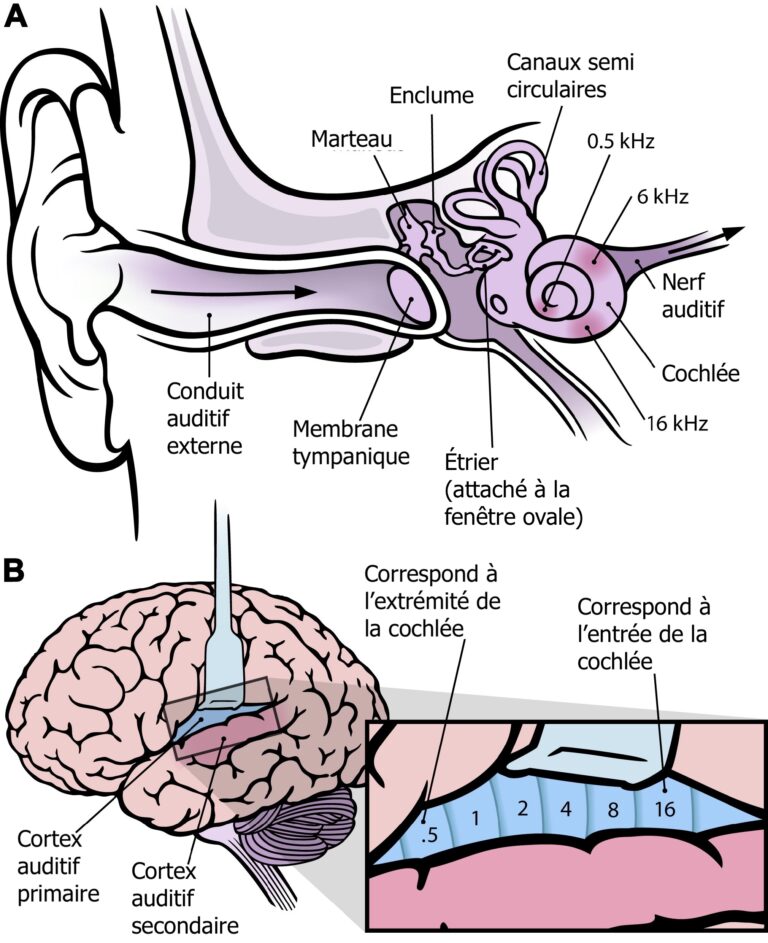

Once the sound waves are transmitted to the cochlea via the tympanic membrane and the ossicular chain, we encounter a third anatomical feature that facilitates speech perception in noise. This is called the tonotopic organization of the cochlea. This term means that each sound we are capable of hearing is captured at a different location along the cochlea based on its frequency (high or low). Indeed, high-frequency sounds are captured by the hair cells at the entrance of the cochlea, while low-frequency sounds are captured by the hair cells at the end of the cochlea. It is as if a series of tiny microphones (the hair cells) with distinct frequency resolutions were arranged along the cochlea, thus allowing a precise capture of the wide range of sounds we encounter in daily life. This tonotopic organization is maintained in all the structures through which the nerve impulses from the cochlea will pass, all the way up to the brain (Figure 4). This tonotopy thus allows us to more easily distinguish all the sounds that arrive at our ears simultaneously.
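The place-to-frequency mapping described above can be approximated numerically. The sketch below uses the Greenwood function, a classic formula from the hearing literature that is not cited in this article, with the parameter values commonly quoted for the human cochlea. Treat it as an approximation introduced here for illustration; the function names are our own.

```python
import math

# Commonly quoted human parameters of the Greenwood function
#   F = A * (10 ** (a * x) - k)
# where x is the relative distance from the apex of the cochlea
# (x = 0: apex, the far end; x = 1: base, the entrance).
A = 165.4
a = 2.1
k = 0.88


def place_to_frequency_hz(x: float) -> float:
    """Approximate frequency (Hz) captured at relative position x."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A * (10.0 ** (a * x) - k)


def frequency_to_place(freq_hz: float) -> float:
    """Inverse mapping: relative position (0 to 1) for a frequency in Hz."""
    return math.log10(freq_hz / A + k) / a


if __name__ == "__main__":
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"position {x:.2f} -> {place_to_frequency_hz(x):8.0f} Hz")
    # Where would a 3 kHz consonant cue land along the cochlea?
    print(f"3000 Hz -> position {frequency_to_place(3000.0):.2f}")
```

With these parameters, the mapping runs from roughly 20 Hz at the far end of the cochlea to roughly 20 kHz at its entrance, which matches the low-to-high arrangement described in the text.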

For more information on tonotopy, we invite you to consult one of our previous articles that covered this topic:

The pathophysiological mechanisms of presbycusis (in French: Les mécanismes physiopathologiques de la presbyacousie; see section 3, Tonotopy: a unique physiological mechanism)

https://speechneurolab.ca/les-mecanismes-physiopathologiques-de-la-presbyacousie/

Motility of the Outer Hair Cells of the Cochlea

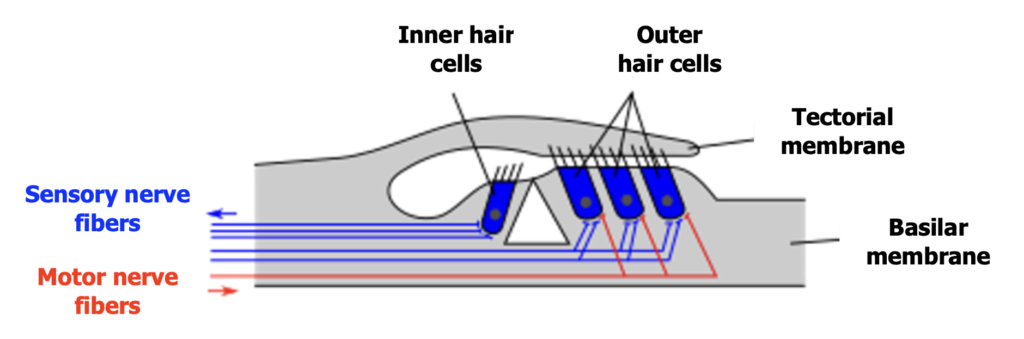

In addition to this tonotopic organization, the outer hair cells, which are distributed along the entire length of the cochlea, have a mechanism that favours the amplification of the softer sounds we perceive. This mechanism is called the motility of the outer hair cells. By contracting and relaxing in response to sound signals, these cells increase the mobility of the tectorial membrane, which triggers the action of the inner hair cells, responsible for transmitting the nerve impulse into the auditory nerve. This amplification is selective: it is less pronounced for the loud sounds that reach our ears. This motility thus increases our chances of perceiving speech when we are in a noisy environment. Note that this mechanism occurs in the form of a reflex (olivocochlear reflex) controlled by a brainstem area named the superior olivary complex. The motor nerve fibres responsible for the motility of the outer hair cells (identified in red in Figure 5) are activated from this superior olivary complex.

To see an outer hair cell in full motility, watch this video:

https://www.youtube.com/watch?v=pij8a8aNpWQ&ab_channel=sixesfullofnines
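The selective amplification provided by the outer hair cells (a lot of gain for soft sounds, little or none for loud ones) can be pictured with a short Python sketch. The gain value and the two level limits below are hypothetical numbers chosen for illustration, and the straight-line decrease between them is an assumption, not a measured curve.

```python
# Hypothetical values for illustration only.
MAX_GAIN_DB = 40.0     # assumed gain applied to the softest sounds
SOFT_LIMIT_DB = 30.0   # at or below this input level, gain is maximal
LOUD_LIMIT_DB = 90.0   # at or above this input level, gain is zero


def ohc_gain_db(input_db: float) -> float:
    """Assumed outer hair cell gain (dB) for a given input level (dB)."""
    if input_db <= SOFT_LIMIT_DB:
        return MAX_GAIN_DB
    if input_db >= LOUD_LIMIT_DB:
        return 0.0
    # Gain decreases linearly as the input gets louder.
    fraction = (LOUD_LIMIT_DB - input_db) / (LOUD_LIMIT_DB - SOFT_LIMIT_DB)
    return MAX_GAIN_DB * fraction


def output_level_db(input_db: float) -> float:
    """Level after amplification by the modelled outer hair cells."""
    return input_db + ohc_gain_db(input_db)


if __name__ == "__main__":
    for level in (20.0, 60.0, 100.0):
        print(level, ohc_gain_db(level), output_level_db(level))
    # prints:
    # 20.0 40.0 60.0
    # 60.0 20.0 80.0
    # 100.0 0.0 100.0
```

In this sketch, an 80 dB spread of input levels (20 to 100 dB) is squeezed into a 40 dB spread at the output: soft sounds are lifted much more than loud ones.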

Auditory Nerve Fibres

Once the mixture of speech and noise has passed through the structures of the outer, middle, and inner ear, the nerve fibres that connect the inner hair cells of the cochlea to the auditory nerve come into play (identified in blue in Figure 5). Each inner hair cell is attached to three categories of nerve fibres, each with a specific preference for the information it transmits: some fibres become active in the presence of soft sounds, others in the presence of medium sounds, and others in the presence of loud sounds. The fibres activated by loud sounds are the most useful for the perception of speech in noise. These fibres have the particularity of being insensitive to continuous noise. However, this insensitivity does not mean that they completely ignore the sound environment. On the contrary, their specialization in transmitting loud sound information allows them to focus on speech signals that emerge above the level of ambient noise. Being particularly sensitive to variations in intensity in noisy environments, these fibres are especially well suited to capturing the sound contrasts inherent in speech.
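To see why the fibres that prefer loud sounds are so valuable in noise, here is a minimal Python sketch of the three fibre categories. It assumes that each fibre's firing rate rises in a straight line between a threshold level and a saturation level; those levels and the maximum rate are hypothetical numbers chosen for illustration, not physiological measurements.

```python
# Hypothetical (threshold_db, saturation_db) pairs for the three fibre
# categories described above; values are for illustration only.
FIBRES = {
    "soft": (0.0, 30.0),
    "medium": (20.0, 60.0),
    "loud": (40.0, 100.0),
}
MAX_RATE = 200.0  # assumed maximum firing rate, in spikes per second


def firing_rate(fibre: str, level_db: float) -> float:
    """Firing rate of a fibre category at a given sound level."""
    threshold, saturation = FIBRES[fibre]
    fraction = (level_db - threshold) / (saturation - threshold)
    # Clamp between silence (0) and saturation (1).
    return MAX_RATE * min(1.0, max(0.0, fraction))


def rate_change(fibre: str, noise_db: float, speech_peak_db: float) -> float:
    """How much the firing rate changes when a speech peak rises above
    a steady background noise."""
    return firing_rate(fibre, speech_peak_db) - firing_rate(fibre, noise_db)


if __name__ == "__main__":
    # Steady noise at 65 dB with a speech peak reaching 75 dB.
    for name in FIBRES:
        print(name, round(rate_change(name, 65.0, 75.0), 1))
```

In this toy scene, the "soft" and "medium" fibres are already saturated by the background noise, so their firing rate does not change when the speech peak arrives. Only the "loud" fibres still have room to respond, which is how they can signal the speech contrasts that rise above the noise.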

Conclusion

In a world where noise is an integral part of our daily environment, understanding speech amidst these sound interferences represents a constant challenge. In this post, we presented the mechanisms of the peripheral auditory system that play a facilitative role in our ability to perceive speech in noisy conditions. Whether it is thanks to the outer ear transfer function that amplifies speech sounds, the middle ear acoustic reflex that reduces the impact of harmful sounds, the cochlea’s tonotopy that distinguishes sounds by their pitch, the motility of the outer hair cells that amplifies the softest sounds, or thanks to specific fibres of the auditory nerve, each of these mechanisms contributes to our ability to communicate effectively despite the presence of ambient noise!

In the second post of this series, we will address the central mechanisms that contribute to the perception of speech in noise.

Bibliography

Ashmore, J. (2008). Cochlear outer hair cell motility. Physiological Reviews, 88, 173-210. https://doi.org/10.1152/physrev.00044.2006

Davis, A. C. (1989). The prevalence of hearing impairment and reported hearing disability among adults in Great Britain. International Journal of Epidemiology, 18 (4), 911-917. https://doi.org/10.1093/ije/18.4.911

Gilliver, M., Beach, E. F., & Williams, W. (2013). Noise with attitude: Influences on young people’s decisions to protect their hearing. International Journal of Audiology, 52 (sup1), S26-S32. https://doi.org/10.3109/14992027.2012.743049

Gilliver, M., Beach, E. F., & Williams, W. (2015). Changing beliefs about leisure noise: Using health promotion models to investigate young people’s engagement with, and attitudes towards, hearing health. International Journal of Audiology, 54 (4), 211-219. https://doi.org/10.3109/14992027.2014.978905

Hannula, S., Bloigu, R., Majamaa, K., Sorri, M., & Mäki-Torkko, E. (2011). Self-reported hearing problems among older adults: Prevalence and comparison to measured hearing impairment. Journal of the American Academy of Audiology, 22 (8), 550-559. https://doi.org/10.3766/jaaa.22.8.7

Kawase, T., Delgutte, B., & Liberman, M. C. (1993). Antimasking effects of the olivocochlear reflex. II. Enhancement of auditory-nerve response to masked tones. Journal of Neurophysiology, 70, 2533-2549. https://doi.org/10.1152/jn.1993.70.6.2533

Liberman, M. C., & Kiang, N. Y. (1984). Single-neuron labeling and chronic pathology. IV. Stereocilia damage and alterations in rate and phase level functions. Hearing Research, 16, 75-90. https://doi.org/10.1016/0378-5955(84)90026-1

Pang, J., Beach, E. F., Gilliver, M., & Yeend, I. (2019). Adults who report difficulty hearing speech in noise: An exploration of experiences, impacts and coping strategies. International Journal of Audiology, 58 (12), 851-860. https://doi.org/10.1080/14992027.2019.1670363

Tremblay, K. L., Pinto, A., Fischer, M. E., Klein, B. E. K., Klein, R., Levy, S., Tweed, T. S., & Cruickshanks, K. J. (2015). Self-reported hearing difficulties among adults with normal audiograms: The Beaver Dam Offspring Study. Ear and Hearing, 36 (6), e290. https://doi.org/10.1097/AUD.0000000000000195

Zheng, Y., & Guan, J. (2018). Cochlear synaptopathy: A review of hidden hearing loss. Journal of Otorhinolaryngology Disorders & Treatment, 1 (1). https://dx.doi.org/10.16966/jodt.105

Suggested readings

- Being Deaf or hard of hearing

- Audiology and the work of audiologists

- New scientific article on audiovisual integration

- The auditory cortex

- The central subcortical auditory system

- The peripheral auditory system

- The cocktail party explained

- Can musical practice improve listening to conversations in noise?

- Speech perception: a complex ability

- Action potential

- Synaptic transmission